At its annual developer conference last week, Google announced the second release of its conversational AI system, LaMDA, which stands for Language Models for Dialogue Applications. Google says LaMDA 2 is a more finely tuned version of its original release that uses open-domain dialogue technology for conversation.

LaMDA is built on Google’s own Transformer neural network, an open source architecture that the company debuted in 2017. LaMDA was trained with dialogue (a dataset of 1.56 trillion words from public web data and documents, to be exact) to allow it to have what the company calls “natural, sensible and specific conversations.” It was then fine-tuned to generate natural language responses to given contexts and then classify its own responses according to whether they are safe and high quality. (More specific training information can be found in this developer blog post.)
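To make the "generate, then classify" idea concrete, here is a minimal Python sketch. It is not Google's implementation: the article only says that LaMDA classifies its own responses for safety and quality, so the filter-then-rank step, the threshold, and every name below (`pick_response`, `toy_safety`, `toy_quality`) are assumptions made for illustration. The two scoring functions are toy stand-ins for what would be learned classifiers.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class ScoredResponse:
    text: str
    safety: float   # higher means safer (hypothetical scale)
    quality: float  # higher means better (hypothetical scale)


def pick_response(
    candidates: List[str],
    safety_fn: Callable[[str], float],
    quality_fn: Callable[[str], float],
    safety_threshold: float = 0.5,
) -> Optional[str]:
    """Drop candidates scored as unsafe, then return the highest-quality survivor."""
    scored = [ScoredResponse(c, safety_fn(c), quality_fn(c)) for c in candidates]
    safe = [s for s in scored if s.safety >= safety_threshold]
    if not safe:
        return None  # nothing passed the safety filter
    return max(safe, key=lambda s: s.quality).text


# Toy stand-ins for learned classifiers (assumptions, not real models).
BLOCKLIST = {"stupid"}


def toy_safety(text: str) -> float:
    # Score 0.0 if any blocklisted word appears, else 1.0.
    return 0.0 if any(word in text.lower() for word in BLOCKLIST) else 1.0


def toy_quality(text: str) -> float:
    # Crude proxy for specificity: count of distinct words.
    return float(len(set(text.lower().split())))


candidates = [
    "That is a stupid question.",
    "Nice.",
    "The Mariana Trench is the deepest part of the ocean.",
]
best = pick_response(candidates, toy_safety, toy_quality)
print(best)
```

In this sketch the first candidate is removed by the safety filter, and the longer, more specific answer outranks the one-word reply. A real system would replace both toy functions with model-based scores.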

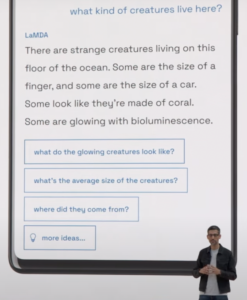

Google CEO Sundar Pichai demonstrates LaMDA. With its “Imagine It” capability, users can input ideas and the AI will generate descriptive responses and even follow-up questions, as seen here.

During his keynote, Google CEO Sundar Pichai queued up pre-made demonstrations of LaMDA 2 that he said were not specific products but “quick sketches” to illustrate LaMDA’s capabilities. One is a creative function called “Imagine It,” where users can input their own ideas and the AI will generate imaginative and relevant descriptions of them. He showed LaMDA answering the prompt “Imagine I’m at the deepest part of the ocean” with “You’re in the Mariana Trench, the deepest part of the ocean! The waves are crashing against the walls of your submarine. You are surrounded by total darkness.” This description was followed by a list of follow-up questions generated by LaMDA, such as “What kind of creatures live here?” Ideas can relate to a straightforward topic (like the given example of the Mariana Trench) or to something more abstract (such as how being on a planet made of ice cream might feel).

Another function, called “Talk About It,” allows users to ask LaMDA 2 questions about a specific subject, after which it will stay on topic no matter which follow-up questions are asked.

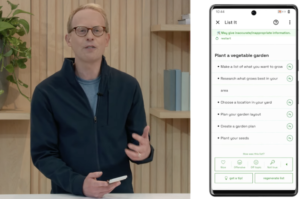

Perhaps the most interesting capability, “List It,” was demonstrated live, this time by Senior Director of Product Management Josh Woodward, who said: “List It explores whether LaMDA can take a complex goal or topic and break it down into relevant subtasks. It can help me figure out what I’m trying to do and generate useful ideas I might not have thought of.”

Woodward tells LaMDA he wants to plant a vegetable garden, and it instantly provides him with a detailed list of subtasks, including “Make a list of what to grow” and “Research what grows best in your area.” Each suggested subtask can be broken down into a list of more suggestions specific to the task. LaMDA can also generate tips, such as trying container growing if yard space is a concern.

Google Senior Director of Product Management Josh Woodward demonstrates LaMDA 2’s list capabilities at Google I/O.

This kind of technology has often exhibited flaws, and Pichai cautioned that it has a long way to go. LaMDA can still generate inaccurate, inappropriate, or offensive responses.

“We are at the beginning of a journey to make models like these useful to people, and we feel a deep responsibility to get it right,” Pichai said during his keynote. “And to make progress, we need people to experience the technology and provide feedback.”

Google is gathering that feedback with its new AI Test Kitchen app, which it also debuted during the keynote and which powered Woodward’s demonstration.

The app is only available internally for now, but Pichai mentioned the company may someday grant access in an iterative manner to “a broad range of stakeholders,” including AI researchers, social scientists, and human rights experts. Pichai says this aligns with Google’s AI principles, which include making sure the technology is socially beneficial, is built and tested to be safe, and is held to high standards of scientific excellence.

The post Google Debuts LaMDA 2 Conversational AI System and AI Test Kitchen appeared first on Datanami.