The Artificial Intelligence Act was proposed by the European Commission in April 2021, and it is rapidly progressing through comment periods and rewrites. When it goes into effect, which experts say could occur at the beginning of 2023, it will have a broad impact on the use of AI and machine learning for citizens and companies around the world.

The AI law aims to create a common regulatory and legal framework for the use of AI, including how it’s developed, what companies can use it for, and the legal consequences of failing to adhere to the requirements. The law will likely require companies to receive approval before adopting AI for some use cases, outlaw certain other AI uses deemed too risky, and create a public list of other high-risk AI uses.

At a broad level, the law seeks to codify the EU’s trustworthy AI paradigm, according to an official presentation on the law by the European Commission, the EU’s executive body. That paradigm “requires AI to be legally, ethically, and technically robust, while respecting democratic values, human rights, and the rule of law,” the EC says.

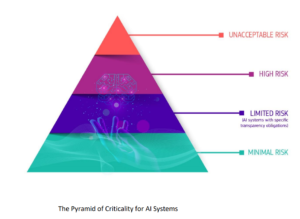

While many of the particulars of the new law are still up in the air (including, critically, the definition of AI), a key element appears to be its “product safety framework,” which sorts prospective AI products into four horizontal categories that apply across all industries.

At the bottom of the “pyramid of criticality” are systems with minimal risk, according to a 2021 EC presentation on the new law compiled by Mauritz Kop of the Stanford-Vienna Transatlantic Technology Law Forum. AI uses that fall into this category are exempt from the transparency requirements that riskier systems must meet. The “limited risk” category covers AI systems, such as chatbots, that are subject to some transparency requirements.

The EU’s new AI Act would categorize AI programs into four categories (Source: EU Artificial Intelligence Act: The European Approach to AI, by Mauritz Kop)

The screws begin to tighten in the high-risk AI category, which has strict transparency requirements. According to Kop’s presentation, the high-risk category includes AI applications used in:

- Critical infrastructure, like transport, that could endanger a person’s life or health (such as self-driving cars);

- Educational or vocational training that impacts a person’s educational or professional attainment (such as scoring of exams);

- Safety components of products (like robot-assisted surgery);

- Employment, workers management, and access to self-employment (such as resume- or CV-sorting software);

- Essential private and public services (such as credit scores);

- Law enforcement use cases (such as evidence evaluation);

- Migration, asylum, and border control management (including evaluation of authenticity of passports);

- Justice and democratic processes (such as applying the law to a concrete set of facts);

- And surveillance systems (such as biometric monitoring and facial recognition).

The fourth category is “unacceptable risk” AI systems. These applications are essentially outlawed because they carry too much risk. Examples in this category include systems designed to manipulate the behavior of people or “specific vulnerable groups,” social scoring, and real-time remote biometric identification systems. There are exceptions, however.

The high-risk category is likely where a lot of companies will focus their efforts to ensure transparency and compliance. The EU’s AI Act would recommend that companies take four steps before introducing an AI product or service that falls into the high-risk category.

Facial recognition would be a high-risk use of AI according to the EU’s new AI Act (Source: Shutterstock)

First, there should be an impact assessment and codes of conduct “overseen by inclusive, multidisciplinary teams,” Kop writes in the presentation.

Secondly, the high-risk system must “undergo an approved conformity assessment,” which likely means passing an internal test or audit. Certain AI products in this category will require the audit to be performed by an outside body and also include benchmarking, monitoring, and validation. Any changes to a system in this category will require it to pass another assessment.

Thirdly, the EU will maintain a database of all high-risk systems. Lastly, a “declaration of conformity” must be signed and the CE label must be shown before the system can enter the market.

Once a high-risk AI system is on the market, additional steps will be taken to ensure it does no harm. Kop writes that government authorities “will be responsible for market surveillance” of these AI uses. There will also be monitoring by end users and human oversight, as well as a “post-market monitoring system” built and maintained by the AI providers.

“In other words, continuous upstream and downstream monitoring,” Kop writes.

There are specific transparency requirements for certain types of systems, including automated emotion recognition systems, biometric categorization, and deepfake/synthetics. People will also have the right to inspect high-risk AI systems.

Enforcement provisions for the proposed law haven’t been finalized yet, but they will likely mirror the General Data Protection Regulation (GDPR), which has been in effect for four years. According to Kop’s presentation, violations by private entities could incur fines of up to €30 million or 6% of the company’s annual turnover (that is, its revenue), whichever is higher.

There would also likely be a grace period before the EU’s AI Act goes into effect, just as with the GDPR. The law is still being drafted, with the latest draft due later this month. Experts say it appears the EU is pushing to get the AI Act into law quickly.

Check here tomorrow for an analysis of the law and how it will likely impact companies, including those in the United States.

The post Europe’s AI Act Would Regulate Tech Globally appeared first on Datanami.
