In the past six months, 20% of companies have faced two or more severe data incidents that directly impacted the business’s bottom line, according to a new report on data quality issued today by Bigeye, a provider of data observability solutions.

That more data quality problems are being encountered should not be surprising. Absent some extraordinary decline in the rate of data errors, the simple fact that companies are collecting and processing more data and relying more on data to power critical business processes means that more data problems are likely to be encountered.

But how bad has the data quality problem become, and what impact is it having on the business? Those are questions Bigeye hopes to quantify with its “State of Data Quality” report.

To gather data for its study, Bigeye surveyed 100 data decision-makers, including more than 60 respondents whose cloud data warehouse installations cost $500,000 or more per year. The company asked respondents about the rate and severity of data quality issues. The results are not encouraging.

For example, 70% of Bigeye survey respondents report having had at least two data incidents that diminished their teams’ productivity. More than half (52%) report experiencing more than five data issues over the past three months. The report concludes that companies experience a median of five to 10 data quality incidents per quarter, while 15% of respondents reported more than 15 incidents.

A full 40% of respondents report having had two or more “severe” data incidents in the past six months that threatened to hurt revenues or profits. By some miracle of data, half of those respondents managed to avoid the wrath of the data quality gods, while the other half actually suffered the deleterious effects of poor data quality, drawing unwanted attention from the C-suite.

“Organizations with more than five data incidents a month are essentially lurching from incident to incident, with little ability to trust data or invest in larger data infrastructure projects,” the Bigeye report states. “They are largely performing reactive over proactive data quality work.”

Bigeye, which emerged from Uber in 2019, found that detecting and resolving data quality issues continues to be difficult, with the process taking anywhere from a day or two to several months. This is largely due to the wide variety of error sources (e.g., upstream data entry errors vs. data ingestion failures vs. server or network failures).

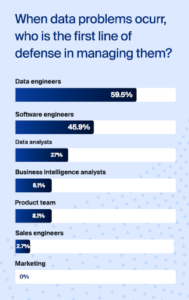

Who is responsible for finding and fixing data quality problems? Well, it depends on the organization and its structure. In some companies, it’s the responsibility of data engineers, while in others, it falls to software engineers. Surprisingly, people with the title of data analyst or data scientist are not as big a part of the data quality game.

“Our survey found that data engineers are the first line of defense in managing data issues, followed closely behind by software engineers,” the report says. Data analysts and BI analysts ranked third and fourth, respectively. Data scientists didn’t make the cut.

While Bigeye didn’t ask (or didn’t disclose) what percentage of its survey-takers used an automated third-party data quality monitoring solution, the survey suggests that such solutions are having a big impact. The vendor says respondents who used automated third-party data quality monitoring solutions report spending less time manually monitoring data quality. Four in 10 respondents said such solutions saved them 30% or more of their time.

Bigeye and other data observability vendors make tools designed to detect data problems in ETL/ELT pipelines, but these are merely patches. The ultimate solution to data quality problems, Bigeye says, is better data governance.

However, that can be a tough sell, particularly in today’s diverse, decentralized, and fast-moving data environments.

“In other words, data quality is the ultimate tragedy of the commons,” Bigeye says in its report. “When each data user or producer simply acts in their own self-interest, they’re incentivized towards actions like duplicating tables and producing untidy data, actions that complicate and deteriorate the data product.”

Having worked on a data team before founding Bigeye, CEO and co-founder Kyle Kirwan was not surprised to learn that data quality is having such a big impact on companies. But putting numbers to a problem has a way of refining one’s perception.

“Coming from a data team before starting Bigeye, I knew anecdotally how much of a burden data quality and pipeline reliability issues were, but the results we got back when we ran this survey really confirmed my experience: they’re one of the biggest blockers to these teams from being successful,” Kirwan says in a press release. “We’re hearing that around 250-500 hours are lost every quarter dealing with data pipeline issues.”

The post Bigeye Sounds the Alarm on Data Quality appeared first on Datanami.