Data scientists want to do data science. It’s right there in the title, after all. But data scientists are often asked to do things other than building machine learning models, such as creating data pipelines and provisioning compute resources for ML training. That’s not a good way to keep data scientists happy.

To keep Netflix’s 300 data scientists happy and productive, in 2017 Savin Goyal, the team leader for Netflix’s machine learning infrastructure team, led development of a new framework that abstracts away some of these less data science-y activities and allows data scientists to spend more of their time on data science. The framework, called Metaflow, was released by Netflix as an open source project in 2019 and has been widely adopted since.

Goyal recently sat down with Datanami to talk about why he created Metaflow, what it does, and what customers can expect from an enterprise version of Metaflow at his startup, Outerbounds.

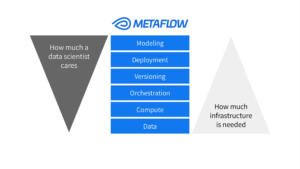

“If you want to do machine learning, there are a few raw ingredients that are needed,” Goyal says. “You need some place to store and manage your data, some way to access that data. You need some place to orchestrate compute for your machine learning models. Then there are all the concerns around MLOps: versioning, experiment tracking, model deployment and whatnot. And then you need to figure out how the data scientist is actually authoring their work.

“So all of these raw building blocks in many ways exist, but then it’s still sort of left up to a data scientist to cross the barriers from one tool to the other,” he continues. “So that’s where Metaflow comes in.”

Metaflow helps by standardizing many of these processes and tasks, which lets data scientists focus on machine learning work in Python or R, using whatever ML framework they choose. In other words, it makes the data scientist “full stack,” Goyal says.

“It’s really important for a company like Netflix to provide a common platform for all its data scientists to be a lot more productive,” he says. “Because one of the big issues that bites most enterprises is that, if you have to deliver value with machine learning, not only do you need people who are great with data science, but you also need to enable folks to navigate internal system complexity.”

With Metaflow in place, Netflix’s data scientists (who called themselves machine learning engineers) don’t have to worry about how to connect to various internal data sources or how to get access to large compute instances, Goyal says. Metaflow automates many aspects of running training and inference pipelines at scale on Netflix’s cloud platform, AWS.

In addition to automating access to data and compute, Metaflow provides MLOps capabilities that help data scientists document their work through code snapshots and other features. According to Goyal, the ability to reproduce results is one of the big benefits of the framework.

“One of the things that lacks in machine learning traditionally is problem reproducibility,” he says. “Let’s say you’re a data scientist and you’re training a model. Oftentimes, nobody else would be able to reproduce your results. Or if you’re running into an issue, nobody would be able to reproduce that issue to debug that. So we basically provide guarantees around reproducibility that then enables people to share results within teams, and that fosters collaboration.”
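Metaflow’s own approach combines code snapshots with persisting the values each step assigns to `self` as versioned artifacts. The following is not Metaflow code; it is a minimal, hypothetical sketch of the general idea, assuming a local directory as the artifact store and a hypothetical `train` function whose only source of randomness is a recorded seed. Because each run record keeps the parameters and a fingerprint of the code next to the outputs, a teammate can reload the run and regenerate the same result.

```python
import hashlib
import pickle
import random
import tempfile
import uuid
from pathlib import Path


def train(params: dict) -> dict:
    # Hypothetical training step: all randomness comes from the recorded seed.
    rng = random.Random(params["seed"])
    weights = [rng.gauss(0.0, 1.0) for _ in range(params["n_weights"])]
    return {"weights": weights}


def code_fingerprint(func) -> str:
    # Hash of the function's bytecode instructions (CPython-specific, illustration only);
    # a real system would snapshot the source files themselves.
    return hashlib.sha256(func.__code__.co_code).hexdigest()


def snapshot_run(store: Path, params: dict, artifacts: dict) -> str:
    # Persist the code fingerprint, parameters, and outputs together under a new run ID.
    record = {
        "code_sha256": code_fingerprint(train),
        "params": params,
        "artifacts": artifacts,
    }
    run_id = uuid.uuid4().hex[:12]
    (store / f"{run_id}.pkl").write_bytes(pickle.dumps(record))
    return run_id


def load_run(store: Path, run_id: str) -> dict:
    # Reload everything recorded for a run.
    return pickle.loads((store / f"{run_id}.pkl").read_bytes())


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        store = Path(tmp)
        params = {"seed": 42, "n_weights": 3}
        run_id = snapshot_run(store, params, train(params))

        # Later, a teammate reloads the run and re-executes it from the recorded inputs.
        record = load_run(store, run_id)
        assert record["code_sha256"] == code_fingerprint(train), "code has changed"
        assert train(record["params"]) == record["artifacts"]
        print(f"run {run_id} reproduced")
```

The seed is what makes the rerun exact: without it, the stored artifacts could be reloaded but not regenerated.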

Metaflow also lets users mix and match different cloud instance types within a given ML workflow, which helps them reduce costs, Goyal says. For example, say a data scientist wants to train an ML model using a large data set housed in Snowflake. Because there is a lot of data, it first needs to go through a memory-intensive analysis process, he says. Then the data scientist may want to train models on GPUs. After the compute-intensive training process, deploying the model for inference requires fewer resources.

“You can basically carve out different sets of your workflow to run on different instance types and different resources,” Goyal says. “That further lowers your overall cost of training a machine learning model. You do not want to pay for a GPU instance while you’re only doing something that’s memory-intensive and doesn’t really engage the GPU.”
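In Metaflow, each step can request its own CPU, memory, and GPU resources with the `@resources` decorator. The arithmetic below is a hypothetical sketch, not Metaflow’s scheduler: the instance catalog, prices, and step durations are invented numbers chosen only to show why matching each step of the Snowflake example to the cheapest instance that fits it costs less than running the whole workflow on one GPU machine.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class InstanceType:
    name: str
    memory_gb: int
    gpus: int
    usd_per_hour: float  # hypothetical price, for illustration only


@dataclass(frozen=True)
class Step:
    name: str
    memory_gb: int
    gpus: int
    hours: float  # hypothetical duration, for illustration only


# Hypothetical catalog; real instance names and prices vary by provider and region.
CATALOG = [
    InstanceType("small", memory_gb=16, gpus=0, usd_per_hour=0.20),
    InstanceType("highmem", memory_gb=256, gpus=0, usd_per_hour=1.50),
    InstanceType("gpu", memory_gb=256, gpus=4, usd_per_hour=12.00),
]


def cheapest_fit(memory_gb: int, gpus: int) -> InstanceType:
    # Least expensive instance type that meets both the memory and the GPU request.
    fits = [i for i in CATALOG if i.memory_gb >= memory_gb and i.gpus >= gpus]
    if not fits:
        raise ValueError("no instance type satisfies the request")
    return min(fits, key=lambda i: i.usd_per_hour)


def per_step_cost(steps: List[Step]) -> float:
    # Each step runs on its own cheapest-fitting instance type.
    return sum(cheapest_fit(s.memory_gb, s.gpus).usd_per_hour * s.hours for s in steps)


def single_instance_cost(steps: List[Step]) -> float:
    # Baseline: one instance type big enough for the most demanding step runs everything.
    big = cheapest_fit(max(s.memory_gb for s in steps), max(s.gpus for s in steps))
    return sum(big.usd_per_hour * s.hours for s in steps)


# Memory-heavy preparation, GPU training, then light inference.
PIPELINE = [
    Step("prepare_data", memory_gb=200, gpus=0, hours=3.0),
    Step("train", memory_gb=64, gpus=4, hours=2.0),
    Step("inference", memory_gb=8, gpus=0, hours=1.0),
]

if __name__ == "__main__":
    print(f"per-step instances: ${per_step_cost(PIPELINE):.2f}")
    print(f"one GPU instance:   ${single_instance_cost(PIPELINE):.2f}")
```

With these invented numbers, the per-step plan pays GPU rates only for the two training hours, while the single-instance plan pays them for all six.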

Metaflow allows data scientists to keep their development tools and frameworks of choice. They can continue to use TensorFlow, PyTorch, scikit-learn, XGBoost, or any other ML framework they want. While there is a GUI for Metaflow, the primary way users interact with the product is by adding decorators to their Python or R code. At runtime, the decorators determine how the code will execute, Goyal says.
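In Metaflow itself, steps are methods of a `FlowSpec` subclass marked with `@step`, and decorators such as `@resources`, `@batch`, and `@kubernetes` tell the runtime what a step needs and where it should run. The pure-Python toy below is not Metaflow and not how Metaflow is implemented; it only sketches the general pattern, assuming hypothetical `toy_step` and `toy_resources` decorators that attach metadata to plain functions and a hypothetical `run` function that reads that metadata at execution time.

```python
from typing import Callable, Dict, List

# Hypothetical registry of steps in definition order (not Metaflow's internals).
STEPS: List[Callable[[Dict], None]] = []


def toy_step(func: Callable[[Dict], None]) -> Callable[[Dict], None]:
    # Register the function as a workflow step; it is not executed here.
    STEPS.append(func)
    return func


def toy_resources(**request) -> Callable:
    # Attach a resource request as metadata for the runner to read later.
    def decorate(func: Callable[[Dict], None]) -> Callable[[Dict], None]:
        func.resource_request = request
        return func
    return decorate


@toy_resources(memory_gb=200)
@toy_step
def prepare_data(state: Dict) -> None:
    state["rows"] = [x * 2 for x in range(5)]


@toy_resources(gpus=1)
@toy_step
def train_model(state: Dict) -> None:
    state["model"] = sum(state["rows"]) / len(state["rows"])


@toy_step
def end(state: Dict) -> None:
    state["done"] = True


def run(state: Dict, remote: bool = False) -> List[str]:
    # The runner, not the step body, decides where each step executes.
    log = []
    for func in STEPS:
        request = getattr(func, "resource_request", None)
        target = f"remote {request}" if remote and request else "local"
        log.append(f"{func.__name__} -> {target}")
        func(state)  # a real runner would ship this step to the chosen machine
    return log


if __name__ == "__main__":
    for line in run({}, remote=True):
        print(line)
```

Keeping the resource request outside the step body is the design choice that matters here: the same function runs unchanged on a laptop or, in a real system, on a remote machine chosen from the metadata.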

“We are basically targeting people who know data science,” he says. “They don’t want to be taught data science. They’re not looking for a no-code, low-code solution. They’re looking for a solution that firmly puts them in control while abstracting away all infrastructure concerns. That’s where Metaflow comes in.”

Since Netflix released Metaflow as open source in 2019, it has been adopted by hundreds of companies. According to the project’s website, users include Goldman Sachs, Autodesk, Amazon, S&P Global, Dyson, Intel, Zillow, Merck, WarnerMedia, and DraftKings. CNN, another user, reports an 8x increase in the number of models it puts into production over time, Goyal says.

The open source project has 7,000 stars on GitHub, putting it among the top one or two projects in this space, he says. The Slack channel is quite busy, with about 3,000 active members, he says. Originally released for AWS, Metaflow has since been adapted to work on Microsoft Azure and Google Cloud, as well as Kubernetes, Goyal says. It has also been used with hosted clouds from Oracle and Dell.

In 2021, Goyal co-founded Outerbounds with Ville Tuulos, a former Netflix colleague, and Oleg Avdeev, who came from MLOps vendor Tecton. Goyal and his team at the San Francisco-based company remain the primary developers of the open source Metaflow project. Four months ago, Outerbounds launched a hosted version of Metaflow that lets users get up and running very quickly on AWS.

Because Outerbounds controls how the infrastructure is deployed, it can offer stronger guarantees around security, performance, and fault tolerance with its managed offering than with the open source version, Goyal says.

“In open source, we have to make sure that our offering works for every single user out there who wants to use us. In certain specific areas, that’s sort of easier said than done,” he says. “If you’re using our managed offering then we can afford to take certain very specific opinions” about the deployment.

Reducing cloud spending is a big focus for the Outerbounds offering, particularly with the scarcity and expense of GPUs these days. Eventually, the company plans to enable customers to tap into the power of GPUs residing on-prem, provided there is some sort of connection to the hyperscaler of choice.

“As you start scaling machine learning models, things start getting expensive quite quickly and there are a lot of mechanisms to lower that cost quite a bit,” Goyal says. “Do I know that my GPUs are not data-starved, for example? How can we make sure that you’re able to move data with the highest throughput possible? That’s more than cloud providers would provide you.”


The post How to Manage ML Workflows Like a Netflix Data Scientist appeared first on Datanami.
