Sure, you could stitch together your own data management tools and run them on a lakehouse outfitted with your choice of data processing engines. Or you could buy a pre-built data fabric pre-integrated atop a lakehouse architecture from one of the tech giants that recently introduced such offerings. The choice is up to you.

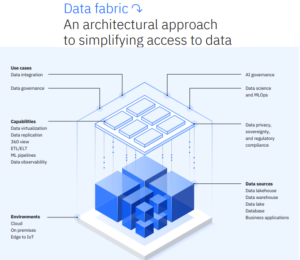

Data fabrics have been growing in popularity over the past few years as an architectural element for re-centralizing the management of data amid the relentless growth of isolated data silos. A traditional data fabric will bring together, at the metadata level, various data management tools, including ETL, governance, lineage tracking, a data catalog, and access control, with the goal of making it easier for administrators to grant their users access to disparate data silos in a controlled, non-chaotic manner.

Many larger companies have built their own data fabrics by integrating various best-of-breed point products. A few data management tool vendors have also offered their own suites, including Informatica, IBM, and Talend. See this story to read how Forrester analyst Noel Yuhanna (who’s credited with coining the term “data fabric”) sizes up the market.

But a new data fabric push from IBM, HPE, and Microsoft indicates that the market may be ready for pre-built data fabrics. Over three consecutive weeks in May, Microsoft, HPE, and IBM each unveiled new data fabric offerings or updated existing data fabrics with new lakehouse capabilities designed to make it easy to integrate and analyze big data sets without giving up centralized control and security in hybrid cloud environments.

IBM kicked off this spring’s data fabric rush with the unveiling of watsonx at its THINK conference on May 9. Watsonx.data is technically a lakehouse that utilizes a cloud-based object store running in AWS or the IBM Cloud, along with Presto and Apache Spark engines for data processing (and legacy Db2 and Netezza engines for existing customers). Apache Iceberg provides data consistency. The watsonx.data lakehouse is closely linked with the IBM Cloud Pak for Data, which fills more of a traditional data fabric role, with built-in capabilities for governance, integration, privacy, and security.

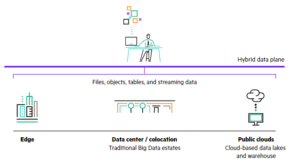

A week later, on May 16, HPE unveiled an update to Ezmeral Data Fabric. The updated data fabric is based on MapR’s technology and features S3, POSIX, and Kafka storage, along with support for Iceberg and Delta, which is Databricks’ table format. The big news was that HPE linked Ezmeral Data Fabric to its new Unified Analytics, which features “Kubernetized” versions of Spark, Apache Superset, Apache Airflow, Feast, Kubeflow, MLflow, Presto SQL, and Ray. The engines are isolated in containers to limit their respective “blast radii,” a lesson learned from the Hadoop days.

HPE Ezmeral Data Fabric Software combines files, objects, tables, and streaming data into a unified data plane (Source: HPE)

A week after that, Microsoft debuted Microsoft Fabric on May 23. The offering, together with OneLake (the new name of its data lakehouse offering), is designed to serve as a one-stop shop for all of an organization’s data management, analytic, and machine learning needs. On the data management front, Microsoft Fabric brings data governance, ETL, data discovery, sharing, lineage, and compliance management. Data is stored in Delta–a nod to Microsoft’s closer partnership with Databricks–while various data warehousing and AI products from the Azure cloud (not to mention Databricks’ engines) can be brought to bear on the data.

Manish Patel, the co-founder and CPO of data connectivity provider CData Software, recently provided Datanami with some insight into the announcements. He says they show customers are ready for an easier onramp into big data, and vendors are ready to provide it to them.

“I think what IBM, HP, Microsoft and others are trying to do is say, you don’t need to go and do this across multiple products, multiple technologies, learn multiple ways of doing things where you can pretty much do it in a singular way with singular domain knowledge,” Patel says.

“I think it’s a concerted effort by the likes of these larger companies and larger organizations to basically say, we can simplify this for you,” he continues. “We’re going to give you one way of doing things in the technology you understand, that you already bought into as part of your organization or spend. Why look elsewhere?”

The fact that IBM, HPE, and Microsoft made such similar data fabric and lakehouse announcements indicates there is strong market demand, Patel says. But it’s also partly a result of the evolution of data architecture and usage patterns, he says.

Microsoft Fabric, in combination with OneLake, is designed to provide a one-stop shop for most data, analytic, and AI needs (Image courtesy Microsoft)

“I think there are probably some large enterprises that decide, listen, I can’t do this anymore. You need to go and fix this. I need you to do this,” he says. “But there’s also some level of just where we’re going…We were always going to be in a position where governance and security and all of those types of things just become more and more important and more and more intertwined into what we do on a daily basis. So it doesn’t surprise me that some of these things are starting to evolve.”

While some organizations still see value in choosing the best-of-breed products in every category that makes up the data fabric, many will gladly give up having the latest, greatest feature in one particular area in exchange for having a whole data fabric they can move into and be productive from day one.

That may be due to the continued maturation of data fabric solutions and the recognition that this is a valuable data access pattern. It may also be a side effect of economic uncertainty and greater scrutiny of IT spending, particularly in the cloud, Patel says.

“I think in the heyday, it was nice to be able to say ‘Hey, I have a product that does XY and Z more, or XY and Z better,’ because maybe it was a differentiator or maybe it was providing value,” he says. “But once you get into this cost scrutiny, I think people start having to retrench from some of those ideas…It’s a rebalancing of spend as opposed to a completely retrenchment in all spend.”

Patel sees Microsoft Fabric as a potential way for Microsoft to elevate itself above the other hyperscalers and to leverage its established dominance in productivity software via Office 365.

“I think…Microsoft’s ability to be able to talk to a captive audience and their ability to benefit from the existing relationships that they have with a lot of these large enterprises, and the connectivity into day-to-day tools like Office 365, Teams etc. that I think just might give them the edge,” he says. “This connected experience across the enterprise is something they’re pretty uniquely positioned to do, at least in my mind.”

Related Items:

HPE Brings Analytics Together on its Data Fabric

Microsoft Unifies Data Management, Analytics, and ML Into ‘Fabric’

IBM Embraces Iceberg, Presto in New Watsonx Data Lakehouse

The post All-In-One Data Fabrics Knocking on the Lakehouse Door appeared first on Datanami.
