Have you heard the buzz about the predicted death of hard disk drives (HDDs)? Some have gone all in on projections that the growth of SSD deployments will eliminate demand for HDDs within five years. Other industry analysts size the market differently. Having seen IT leaders and their partners deploy successful Big Data applications using my company’s software on either SSDs or HDDs – and sometimes both – I think the subject could use some balanced perspective.

Big Data workloads involve the processing, storage, and analysis of massive volumes of data that exceed the capabilities of traditional data management tools and techniques. They often require specialized technologies, such as distributed computing frameworks, to efficiently manage and derive insights from the data. These workloads are commonly found in industries where the ability to process and extract valuable insights from large and complex datasets is crucial for making informed decisions.

Many IT team leads presume that Big Data workloads implicitly require more costly solid-state drives (SSDs) for storage. Vendor hype on the subject has grown to the point that it obscures a more nuanced reality – especially given innovation in multiple vendors’ hard drive density and performance.

In general, SSDs can enable new levels of performance and efficiency in big data workloads by significantly improving data access and processing speeds, reducing latency, and supporting the requirements of modern data-intensive applications. This is certainly true in some cases – but not all. Even though hard disk drive (HDD) shipments recently fell 20% quarter-over-quarter, the stocks of makers of disk drives, memory chips and semiconductor-manufacturing equipment are on the rise – some significantly. While there are a few who argue that the HDD is dying, the reality is that SSDs can’t totally replace this storage medium. In fact, there are many circumstances where HDD is a better fit.

Stay Balanced: Consider When HDDs May Make More Sense Than Flash

The first instance where HDD may be a better option is sequential IO workloads. Flash does have superior random IO characteristics compared to HDDs of comparable density. In a transaction processing system – querying client accounts and balances in a database, for instance – this is advantageous for applications that read small amounts of data on a random-access basis.

However, the performance gap between flash and HDD for sequential IO is far less significant. Sequential workloads scan larger data files from first to last byte, such as when creating or reading a huge medical image like an MRI, backing up or restoring a backup file, or streaming video.

In terms of price per gigabyte, flash media and HDD media still differ by a factor of five today. For years, “flash at cost parity with HDD” has been promised; nonetheless, it always seems to be five years away.

Another situation that favors HDDs is petabyte scale with unstructured data. High-density SSDs can’t completely replace HDDs in terms of performance and cost, particularly when it comes to petabyte-scale unstructured data storage. HDDs have been mainly used in storage systems designed for petabyte-scale unstructured data (mostly file and object storage) due to their high density, performance qualities, and dependability, as well as – and perhaps most crucially – their low cost per gigabyte of capacity.

For the foreseeable future, HDDs will continue to be a viable option for many other petabyte-scale unstructured data workload profiles, particularly when cost (in terms of both dollars per terabyte and price/performance) is a consideration.

Consider Performance Degradation Concerns

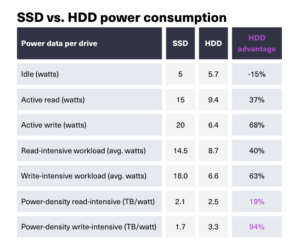

For an explanation of how these figures were calculated, click here.

The performance of an SSD starts to suffer when it’s about 50% full. Accordingly, capacity should be kept below 70% for the sake of performance. What does this imply for users in general? Flash storage solutions operate at their absolute peak when first deployed, with performance deteriorating over time as the system fills up. To protect performance, some astute administrators even set custom “over-provisioning” levels on the SSD. However, this reduces the drive’s available data storage capacity and raises the true cost per usable terabyte.
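To make the cost effect concrete, here is a minimal Python sketch of the arithmetic. The function name, its parameters, and the price of 100 per raw terabyte are illustrative assumptions, not figures from our analysis; the 70% fill ceiling is the guideline mentioned above.

```python
def effective_cost_per_usable_tb(raw_cost_per_tb, max_fill_fraction,
                                 over_provision_fraction=0.0):
    """Cost per terabyte of capacity that can actually be filled.

    raw_cost_per_tb: purchase price per raw terabyte (any currency).
    max_fill_fraction: highest fill level allowed, e.g. 0.70.
    over_provision_fraction: share of raw capacity set aside as extra
        over-provisioning (0.0 means none).
    """
    if not 0 < max_fill_fraction <= 1:
        raise ValueError("max_fill_fraction must be in (0, 1]")
    if not 0 <= over_provision_fraction < 1:
        raise ValueError("over_provision_fraction must be in [0, 1)")
    # Only the part that is neither reserved nor left empty holds data.
    usable_fraction = (1 - over_provision_fraction) * max_fill_fraction
    return raw_cost_per_tb / usable_fraction


# Hypothetical price of 100 per raw TB, kept below 70% full.
print(round(effective_cost_per_usable_tb(100, 0.70), 2))        # 142.86
# Same drive with a further 10% reserved as over-provisioning.
print(round(effective_cost_per_usable_tb(100, 0.70, 0.10), 2))  # 158.73
```

Under these assumed inputs, the fill ceiling alone adds roughly 43% to the price of each usable terabyte, before any extra over-provisioning is counted.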

Not all large-scale, mission-critical operations require quad-level cell (QLC) flash-based data storage. QLC flash is not a good fit for the majority of other workloads, even backups, which are at the core of contemporary data and ransomware security methods. However, it has a place with read-intensive, latency-sensitive workloads that justify its bigger price tag.

SSDs Are Better Suited for Read-Intensive Operations

It’s important to consider the durability and reliability requirements of the application. The durability and reliability of QLC or other high-density flash for use with “higher churn” workloads must be called into question. In technical terms, a drive’s write/overwrite rating, expressed in the industry’s “Program/Erase (P/E) cycles,” drops sharply as the number of bits stored per cell grows (such as when switching from TLC to QLC). In general, flash tolerates far fewer overwrites than HDDs, and QLC fewest of all. Because backup/restore workloads overwrite data frequently, these churn properties raise concerns about the long-term viability of QLC in this use case.
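A rough way to reason about churn is to estimate how long a drive’s rated P/E cycles would last under a given daily write volume. The sketch below is a common back-of-the-envelope approximation, not a vendor formula; the function name and every input value (capacity, cycle rating, daily writes, write amplification) are hypothetical placeholders to be replaced with numbers from a drive’s datasheet and your own workload.

```python
def estimated_lifetime_years(capacity_tb, pe_cycles,
                             host_writes_tb_per_day,
                             write_amplification=1.0):
    """Approximate years until rated P/E cycles are used up.

    capacity_tb: drive capacity in terabytes.
    pe_cycles: rated program/erase cycles per cell (from the datasheet).
    host_writes_tb_per_day: data the application writes per day.
    write_amplification: flash writes per host write (>= 1.0).
    """
    if host_writes_tb_per_day <= 0 or write_amplification < 1.0:
        raise ValueError("need positive daily writes and amplification >= 1")
    # Total host data the drive can absorb before cells wear out.
    total_host_writes_tb = capacity_tb * pe_cycles / write_amplification
    return total_host_writes_tb / (host_writes_tb_per_day * 365)


# Placeholder inputs only: 10 TB drive, 1,000 rated cycles,
# 5 TB written per day, write amplification of 2.
years = estimated_lifetime_years(10, 1000, 5, write_amplification=2.0)
print(round(years, 2))  # 2.74
```

The estimate scales linearly with the cycle rating, so halving the rated P/E cycles halves the expected life under the same backup churn.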

However, SSDs/QLC are often a better choice for other applications, such as content distribution and media streaming. Content distribution and media streaming involve predominantly read-intensive operations, where data retrieval speed is crucial. QLC SSDs provide fast read speeds, low latency, and efficient data access, supporting seamless content delivery to end-users.

Sustainability: Don’t Assume SSDs Are Greener

Recently, industry talks have suggested that flash should generally outperform a spinning medium like HDD on power efficiency. This would seem to make sense because HDDs need electric motors to move actuator arms and spin mechanical platters, while SSD flash doesn’t.

Yet our recent analysis found that high-density SSDs do not always outperform HDDs in power density or usage. Of course, drive densities are likely to increase in the next few years, so this result could change over time. For the balanced workload profile used in the research, HDDs offer 19% higher power density than SSDs. The advantage for HDDs increases to 94% for the workload profile with a high write demand. Increased density may offset the presumed sustainability advantage of SSDs across a variety of workloads.

SSDs in the Big Data Landscape

The role of SSDs in Big Data workflows is more nuanced than often assumed. While SSDs certainly enhance performance, their necessity for all scenarios is not a given. As the AI sector surges, HDDs are reemerging as contenders, particularly for sequential workloads and petabyte-scale unstructured data. Durability concerns challenge the suitability of QLC for specific high-churn tasks, raising questions about its long-term viability. In parallel, the myth of SSDs’ inherent environmental superiority has been debunked.

In a landscape of changing demands, IT leaders are wise to let the workflow be their guide when choosing between an HDD and an SSD deployment. Careful assessment of an SSD’s applicability is vital for an informed and sustainable data infrastructure decision.

About the author: Wally MacDermid is vice president of strategic alliances at Scality, a hardware-agnostic storage software firm whose solutions help organizations build reliable, secure and sustainable data storage architectures on both HDD and SSD infrastructures. An executive leader who has held diverse technical, strategic alliance and business development roles in both startups and large organizations, Wally has spent 20 years building solutions with partners worldwide that maximize customer results. His depth of expertise in cloud, storage, and networking technologies helps organizations solve their biggest data center challenges — growth, security and cost. You can contact Wally by email here.

Related Items:

Protecting Your Data in the SSD Era Without Compromise: What You Need to Know

Object and File Storage Have Merged, But Product Differences Remain, Gartner Says

The Object (Store) of Your Desire

The post Are SSDs Required for Your Big Data Workflow? The Answer May Surprise You appeared first on Datanami.
