Data scientists and others who work in pandas may be interested to hear about a new release of Nvidia’s RAPIDS cuDF framework, which the company says delivers up to a 150x performance boost for pandas code running on a GPU.

Pandas is a popular Python-based dataframe library used for data manipulation and analysis. Developed and released as open source by Wes McKinney, a 2018 Datanami Person to Watch, pandas is used by an estimated 9.5 million developers around the world.

That number may increase following today’s update to RAPIDS from Nvidia. One of the components of RAPIDS is cuDF, a Python GPU dataframe library built on Apache Arrow (co-developed by McKinney) that offers a pandas-like API for loading, filtering, and manipulating data. With today’s release of RAPIDS version 23.10, cuDF has been updated to enable pandas code to run unchanged in a GPU-accelerated environment.
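As a rough illustration of what "unchanged" means in practice, the sketch below tries to switch on the accelerator before importing pandas and quietly stays on CPU pandas if cuDF is not available. The helper names `enable_gpu_pandas` and `demo` are hypothetical and exist only for this example; `cudf.pandas.install()` is the entry point described in the RAPIDS documentation, and it assumes a RAPIDS install and an Nvidia GPU.

```python
def enable_gpu_pandas() -> bool:
    """Try to switch on cuDF's pandas accelerator mode.

    Returns True if the accelerator was installed, False otherwise.
    (Hypothetical helper for this example, not part of cuDF.)
    """
    try:
        import cudf.pandas  # assumes RAPIDS cuDF is installed and a GPU is present

        cudf.pandas.install()  # should run before pandas is imported
        return True
    except Exception:
        # cuDF is missing or unusable here: plain CPU pandas will be used.
        return False


def demo() -> None:
    """Ordinary pandas code; nothing below refers to cuDF."""
    accelerated = enable_gpu_pandas()

    import pandas as pd  # imported after the accelerator attempt, on purpose

    df = pd.DataFrame({"key": ["a", "b", "a", "b"], "value": [1, 2, 3, 4]})
    totals = df.groupby("key")["value"].sum()

    print("accelerator enabled:", accelerated)
    print(totals)
```

Calling `demo()` runs the same group-by either way. For existing scripts and notebooks, the RAPIDS documentation also describes `python -m cudf.pandas script.py` and the `%load_ext cudf.pandas` notebook magic, neither of which requires editing the pandas code itself.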

The new pandas accelerator mode lets unchanged pandas code run in a unified CPU/GPU environment and achieve performance gains of up to 150x compared to a CPU-only environment, Nvidia Product Marketing Manager Jay Rodge, Senior Technical Product Manager Nick Becker, and Senior Software Engineer Ashwin Srinath wrote in a blog post today.

“cuDF has always provided users with top DataFrame library performance using a pandas-like API,” they wrote. “However, adopting cuDF has sometimes required workarounds.”

For instance, pandas functionality that had not been implemented or supported in cuDF could not benefit from GPU acceleration, they wrote. Other sticking points were the need to design separate code paths for GPU and CPU execution and to switch manually between cuDF and pandas when interacting with other PyData libraries, they said.

“This feature was built for data scientists who want to continue using pandas as data sizes grow into the gigabytes and pandas performance slows,” the engineers wrote. “In cuDF’s pandas accelerator mode, operations execute on the GPU where possible and on the CPU (using pandas) otherwise, synchronizing under the hood as needed. This enables a unified CPU/GPU experience that brings best-in-class performance to your pandas workflows.”
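The "GPU where possible, CPU otherwise" behavior the engineers describe can be pictured as a proxy that tries a fast backend first and falls back to a slow one when an operation is unsupported. The sketch below is a conceptual toy in plain Python, not cuDF's actual implementation; `FallbackProxy`, `FastBackend`, and `SlowBackend` are hypothetical names, and the real library also has to move data between devices, which is omitted here.

```python
import statistics


class FastBackend:
    """Stands in for the GPU path: quick, but not every operation exists."""

    def total(self, values):
        return sum(values)

    def median(self, values):
        # Pretend this operation has not been implemented on the fast path.
        raise NotImplementedError("median is not supported on the fast path")


class SlowBackend:
    """Stands in for CPU pandas: supports everything."""

    def total(self, values):
        return sum(values)

    def median(self, values):
        return statistics.median(values)


class FallbackProxy:
    """Route each call to the fast backend, falling back to the slow one."""

    def __init__(self, fast, slow):
        self._fast = fast
        self._slow = slow
        self.log = []  # records which backend served each call

    def __getattr__(self, name):
        # Only reached for names not found on the proxy itself.
        def call(*args, **kwargs):
            fast_fn = getattr(self._fast, name, None)
            if fast_fn is not None:
                try:
                    result = fast_fn(*args, **kwargs)
                    self.log.append((name, "fast"))
                    return result
                except NotImplementedError:
                    pass  # unsupported on the fast path, so fall through
            result = getattr(self._slow, name)(*args, **kwargs)
            self.log.append((name, "slow"))
            return result

        return call


proxy = FallbackProxy(FastBackend(), SlowBackend())
proxy.total([1, 2, 3])   # served by the fast backend
proxy.median([3, 1, 2])  # falls back to the slow backend
print(proxy.log)
```

The caller writes one code path and never picks a backend, which is the design point behind the unified CPU/GPU experience described in the quote.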

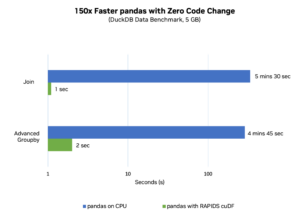

Nvidia said it benchmarked the performance increase with DuckDB’s new version of H2O.ai’s Database-like Ops Benchmark. The benchmark ran on a 5GB data set and included a join and an advanced group-by. Pandas running on CPU took an average of about 5 minutes and 7 seconds to perform the two tasks, whereas pandas accelerated with RAPIDS cuDF took an average of about 1.5 seconds, according to the company’s chart.

GPU-accelerated pandas is available now as a beta in open source RAPIDS version 23.10. It will be added to Nvidia AI Enterprise soon, the company says.

Related Items:

Inside Pandata, the New Open-Source Analytics Stack Backed by Anaconda

Spark 3.0 to Get Native GPU Acceleration

Starburst Brings Dataframes Into Trino Platform

The post Pandas on GPU Runs 150x Faster, Nvidia Says appeared first on Datanami.
