Snowflake is holding its Data Cloud Summit 24 conference next week, and the company is expected to make a slew of announcements, which you will be able to find on these Datanami pages. But among the most closely watched questions is how far Snowflake will go in embracing the Apache Iceberg table format and opening itself up to outside query engines. And is it possible that Snowflake may try to “out open” its rival Databricks, whose conference is the following week?

Snowflake has evolved considerably since it burst onto the scene a handful of years ago as a cloud data warehouse. Founded by engineers experienced in developing analytics databases, the company delivered a top-flight data warehouse in the cloud with full separation of compute and storage, which was truly novel at the time. Companies frustrated with Hadoop flocked to Snowflake, where they found a much more welcoming and friendly user experience.

But while Snowflake lacked the technical complexity of Hadoop, it also lacked the openness of Hadoop. That was a tradeoff that many customers were willing to make back in 2018, when frustration with Hadoop was nearing its peak. But customers perhaps are not so willing to make that tradeoff in 2024, particularly as the high cost of cloud computing has become an issue with many CFOs.

Snowflake executives initially boasted about the increased revenue that the company’s lock-in created, but the company soon learned that customers were genuinely concerned about being locked in to a proprietary format. It took concrete action to address the lock-in in February 2022, when it announced support for Apache Iceberg, although Snowflake has yet to make Iceberg support generally available.

Iceberg, of course, is the open table format developed at Netflix to address data correctness concerns when accessing data stored in Parquet files using multiple query engines, including Hive and Presto. The metadata managed by table formats like Iceberg provides ACID transactionality to data interactions, alleviating concerns that queries would return incorrect data.
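To make the idea concrete, here is a minimal sketch of how snapshot-based metadata can give readers a consistent view while writers commit atomically. It is a toy in-memory model, not the Iceberg API: the names `ToyTable`, `Snapshot`, `commit_append`, and `CommitConflict` are hypothetical, and a real table format keeps this metadata in files and relies on a catalog for the atomic pointer swap.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Snapshot:
    """An immutable set of data files that together form one table version."""
    snapshot_id: int
    data_files: tuple


class CommitConflict(Exception):
    """Raised when another writer committed first."""


@dataclass
class ToyTable:
    """Hypothetical stand-in for a table's metadata; not the Iceberg API."""
    snapshots: dict = field(default_factory=lambda: {0: Snapshot(0, ())})
    current_id: int = 0

    def current(self) -> Snapshot:
        # Readers resolve the pointer once and then see a fixed set of files.
        return self.snapshots[self.current_id]

    def commit_append(self, base_id: int, new_files: list) -> Snapshot:
        # Optimistic concurrency: the commit succeeds only if the table has
        # not moved on since the writer read snapshot `base_id`.
        if base_id != self.current_id:
            raise CommitConflict(
                f"table moved from snapshot {base_id} to {self.current_id}"
            )
        base = self.snapshots[base_id]
        new = Snapshot(base_id + 1, base.data_files + tuple(new_files))
        self.snapshots[new.snapshot_id] = new
        self.current_id = new.snapshot_id  # the single pointer swap
        return new


if __name__ == "__main__":
    table = ToyTable()
    reader_view = table.current()              # a query starts on snapshot 0
    table.commit_append(0, ["a.parquet"])      # one engine commits new data
    print(reader_view.data_files)              # the running query is unaffected
    try:
        table.commit_append(0, ["b.parquet"])  # a second engine with a stale base
    except CommitConflict as err:
        print("retry needed:", err)
```

In this sketch a query never sees a half-written table, because it works from one immutable snapshot, and two engines cannot silently overwrite each other, because a writer holding a stale base must re-read the table and retry.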

Iceberg wasn’t the first table format; that honor goes to Apache Hudi, which engineers developed at Uber to manage data in their Hadoop stack. Meanwhile, the folks at Databricks created their own table format, called Delta, in 2017 and named the data platform they built on it the data lakehouse.

Two years into the Iceberg experiment, Snowflake has some decisions to make. While it allows customers to store data in externally managed Iceberg tables, it doesn’t offer many of the data management capabilities that you would expect from a full-fledged data lakehouse, such as table partitioning, data compaction, and cleanup. Will the company announce those during Data Cloud Summit next week?

If Snowflake goes all-in on Iceberg, it would help differentiate it from Databricks, which has put its chips on Delta (although it announced some capabilities to support Iceberg and Hudi with its Universal Format unveiled last June). The community seems to be coalescing around Iceberg from a popularity viewpoint, which could bolster Snowflake’s position as a top-tier Iceberg-based data lakehouse.

Another question is whether Snowflake will allow external SQL engines to query Iceberg data that it manages. Snowflake’s proprietary SQL engine is highly performant when run on data stored in the original Snowflake table format, and the company has benchmark results to back that up. But Snowflake doesn’t provide a lot of options when it comes to querying data with other engines.

Snowflake offers the Snowpark API, which lets you express queries using Python, Java, and Scala, but this is designed more for data engineering and building machine learning models than for SQL query processing. It also offers an Apache Spark connector that lets you read from and write to Snowflake using Spark 3.2 through 3.4, as well as an Apache Kafka connector. But what customers may really want is the ability to run another SQL query engine against their data.

Snowflake returns to San Francisco for Data Cloud Summit 24

One individual who will be closely watching Snowflake next week is Justin Borgman, the CEO and founder of Starburst. The company does much of the development work on Trino, the query engine that was forked from Presto, and runs its own lakehouse offering built on Iceberg and Trino. Borgman notes that many of the first workloads Netflix ran after creating Iceberg used Presto.

“We feel like it kind of resets the playing field,” Borgman says of the impact of Iceberg. “It’s almost like the battlefield moves from this very traditional ‘get data ingested into your proprietary database and then you have your customer locked in,’ to more of a free for all where the data is unlocked, which is undoubtedly best for customers. And then we’ll fight it out at the query engine layer, the execution layer rather than at the storage layer. And I think that’s just a really interesting development.”

Borgman is understandably eager to use Iceberg as a way into Snowflake’s massive 9,800-strong customer base. He claims benchmark tests show Trino outperforming Snowflake’s query engine on Iceberg tables at about one-third of the cost. Starburst has a number of large customers, such as Lyft, LinkedIn, and Netflix, using the combination of Trino and Iceberg, he says.

Snowflake could go all-in on Iceberg and open itself up to external query engines, but it could still exercise some control over customer workloads through other means. For instance, it could require that customers access data through its data catalog, Borgman predicts.

“They may try to lock you in on a few peripheral features,” he tells Datanami. “But I think at the end of the day, customers won’t tolerate that. I think Iceberg is undoubtedly in their best interests, and I think that they’re going to just start moving tons of data into Iceberg format from where they have the opportunity to choose a different query engine.”

If Snowflake does go fully open and allows external query engines such as Trino, Presto, Dremio, and even Spark SQL to access data that it manages for customers in Iceberg tables, Snowflake customers likely won’t move all their data to Iceberg at once, says Borgman, who was a 2023 Datanami Person to Watch. They’ll likely move their lowest-SLA (service level agreement) queries into Iceberg first, while keeping the more important data in Snowflake’s native format and using Snowflake’s native query engine, which is faster but more expensive.

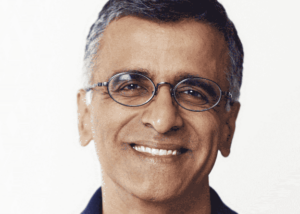

That sets up an interesting dynamic in which Snowflake could hurt its own ability to generate revenue by giving customers what they want, which is more openness. But on the flip side, customers may actually reward Snowflake by moving more data into Iceberg and letting Snowflake manage it for them. That could generate higher revenues for the Bozeman, Montana company, although probably not at the same per-customer rate as if customers kept all their data locked in a proprietary format. That’s a rate-of-growth factor that new Snowflake CEO Sridhar Ramaswamy will have to account for.

When Ramaswamy replaced Frank Slootman in February, it was expected that the company would shift some focus to AI, where it was seen as trailing its rival Databricks. The company’s April launch of its Arctic large language models (LLMs) shows the company is able to move quickly on that front, but its core competitive advantage over Databricks remains with SQL-based analytics and data warehousing workloads.

The changing nature of data warehousing presents both challenges and opportunities for Snowflake. “Basically, the data warehouse now is fully decoupled,” Borgman says. “Snowflake talked a lot about that 12 to 15 years ago, whenever they first came out, of separation of storage and compute. But the key was it was always their storage and their compute.”

Whatever Snowflake chooses to do next week, the cloud giants will undoubtedly be watching. So far, they haven’t really picked sides in the open table format war that’s being waged between Iceberg and Delta, with Hudi in a distant third (although the Apache XTable project developed by Hudi-backer Onehouse threatens to make it all moot). If the market solidifies behind Iceberg, AWS, Microsoft Azure, and Google Cloud could try to cut out the middleman by offering their own soup-to-nuts data lakehouses.

Borgman says this feels like a replay of the mid-2010s, when Hadoop slowly ate into Teradata’s large data warehousing installed base, but with one big difference.

“I think you’re going to see a similar sort of model play out where customers are motivated to try to reduce their data warehouse costs and using more of this lake model,” says Borgman, who was the CEO of Hadoop software vendor Hadapt when it was acquired by Teradata in 2014. “But I think one thing that’s different this time around is that the engines themselves, like Trino and [Presto], have improved dramatically over what Hive or Impala was back then.”

Related Items:

Snowflake Looks to AI to Bolster Growth

Onehouse Breaks Data Catalog Lock-In with More Openness

Teradata Acquires Revelytix, Hadapt

The post How Open Will Snowflake Go at Data Cloud Summit? appeared first on Datanami.
