With the National Institute of Standards and Technology (NIST) set to publish the first Post Quantum Cryptography (PQC) standards in a few weeks, attention is shifting to how to put the new quantum-resistant algorithms into practice. Indeed, the number of companies with practices to help others implement PQC is mushrooming and includes both familiar names (IBM, Deloitte, et al.) and unfamiliar ones (QuSecure, SandboxAQ, etc.).

The Migration to Post-Quantum Cryptography project, run out of NIST’s National Cybersecurity Center of Excellence (NCCoE), is operating at full tilt and includes on the order of 40 commercial participants.

In its own words, “The project will engage industry in demonstrating use of automated discovery tools to identify all instances of public-key algorithm use in an example network infrastructure’s computer and communications hardware, operating systems, application programs, communications protocols, key infrastructures, and access control mechanisms. The algorithm employed and its purpose would be identified for each affected infrastructure component.”

Getting to that goal remains a work in progress that started with NIST’s PQC program in 2016. NIST scientist Dustin Moody leads the PQC project and talked with HPCwire about the need to take post quantum cryptography seriously now, not later.

“The United States government is mandating its agencies to do it, but industry as well is going to need to be doing this migration. The migration is not going to be easy [and] it’s not going to be pain free,” said Moody, whose Ph.D. specialized in elliptic curves, a commonly used base for encryption. “Very often, you’re going to need to use sophisticated tools that are being developed to assist with that. Also talk to your vendors, your CIOs, your CEOs to make sure they’re aware and that they’re planning for budgets to do this. Just because a quantum computer [able to decrypt] isn’t going to be built for, who knows, maybe 15 years, they may think I can just put this off, but understanding that threat is coming sooner than you realize is important.”

Estimates vary wildly around the size of the threat but perhaps 20 billion devices will need to be updated with PQC safeguarding. NIST has held four rounds of submissions and the first set of standards will encompass algorithms selected in the first three. These are the main weapons against quantum decryption attack. The next round seeks to provide alternatives and, in some instances, somewhat less burdensome computational characteristics.

The discussion with Moody was wide-ranging, if perhaps a little dry. He covers PQC strategy and progress and the need to monitor the constant flow of new quantum algorithms. Shor’s algorithm is the famous threat but others are percolating. He notes that many submitted algorithms broke down under testing but says not to make much of that as that’s the nature of the standards development process. He talks about pursuing cryptoagility and offers a few broad tips on preparation.

Moody also touched on geopolitical rivalries amid what has been a generally collaborative international effort.

“There are some exceptions like China never trusting the United States. They’re developing their own PQC standards. They’re actually very, very similar to the algorithms [we’re using] but they were selected internally. Russia has been doing their own thing, they don’t really communicate with the rest of the world very much. I don’t have a lot of information on what they’re doing. China, even though they are doing their own standards, did have researchers participate in the process; they hosted one of the workshops in the field a few years back. So the community is small enough that people are very good at working together, even if sometimes the country will develop their own standards,” said Moody.

How soon quantum computers will actually be able to decrypt current RSA codes is far from clear, but early confidence that it would be many decades has diminished. If you’re looking for a good primer on the PQC threat, he recommended the Quantum Threat Timeline Report released in December by the Global Risk Institute (GRI) as one (figures from its study below).

HPCwire: Let’s talk a little bit about the threat. How big is it and when do we need to worry?

Dustin Moody: Well, cryptographers have known for a few decades that if we are able to build a big enough quantum computer, it will threaten all of the public key crypto systems that we use today. So it’s a serious threat. We don’t know when a quantum computer would be built that’s large enough to attack current levels of security. There’s been estimates of 10 to 15 years, but you know, nobody knows for certain. We have seen progress in companies building quantum computers; systems from IBM and Google, for example, are getting larger and larger. So this is definitely a threat to take seriously, especially because you can’t just wait until the quantum computer is built and then say now we’ll worry about the problem. We need to solve this 10 to 15 years in advance to protect your information for a long time. There’s a threat of harvest-now-decrypt-later that helps you understand that.
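
The timing argument in this answer is often summarized as Mosca's inequality: if the years your data must stay secret plus the years your migration will take exceed the years until a large enough quantum computer exists, then data harvested today will be exposed. The following is a minimal Python sketch of that arithmetic. The function names and the sample figures are illustrative assumptions, not numbers from NIST or from the interview (apart from the 10-to-15-year range Moody mentions).

```python
def exposure_years(shelf_life: float, migration_time: float,
                   time_to_quantum: float) -> float:
    """Years during which harvested ciphertext would be readable.

    shelf_life:      how long the data must remain confidential
    migration_time:  how long the move to quantum-resistant crypto takes
    time_to_quantum: years until a cryptographically relevant quantum
                     computer exists (unknown; an estimate you supply)
    Returns 0.0 when the migration finishes early enough.
    """
    return max(0.0, shelf_life + migration_time - time_to_quantum)


def must_start_now(shelf_life: float, migration_time: float,
                   time_to_quantum: float) -> bool:
    """True when Mosca's inequality (x + y > z) holds."""
    return exposure_years(shelf_life, migration_time, time_to_quantum) > 0.0


if __name__ == "__main__":
    # Hypothetical organization: records kept secret for 10 years,
    # a 5-year migration, and a 12-year guess for the quantum timeline.
    print(exposure_years(10, 5, 12))
    print(must_start_now(10, 5, 12))
```

Under these assumed figures the inequality holds, which is the harvest-now-decrypt-later concern in numeric form: the longer the shelf life of the data, the earlier the migration has to begin.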

HPCwire: Marco Pistoia, who leads quantum research for JPMorgan Chase, said he’d seen a study suggesting as few as 1300 or so logical qubits might be able to break conventional RSA code, although it would take six months to do so. That was a year ago. It does seem like our ability to execute Shor’s algorithm on these systems is improving, not just the brute force, but our cleverness in getting the algorithm to run.

Dustin Moody: Yep, that’s true. And it’ll take a lot of logical qubits. So we’re not there yet. But yeah, progress has been made. You have to have the problem solved and migrate to new solutions before we ever get to that point.

HPCwire: We tend to focus on Shor’s algorithm because it’s a direct threat to the current encryption techniques. Are there others in the wings that we should be worried about?

Dustin Moody: There’s a large number of quantum algorithms that we are aware of, Shor being one of them, Grover’s being another one that has an impact on cryptography. But there’s plenty of other quantum algorithms that do interesting things. So whenever anyone is designing a crypto system, they have to take a look at all those and see if they look like they could attack the system in any way. There’s kind of a list of, I don’t know, maybe around 15 or so that potentially people have to kind of look at and figure out, do I need to worry about these?

HPCwire: Does NIST have that list someplace?

Dustin Moody: There was a guy at NIST who kept up such a list. I think he’s at Microsoft now. It’s been a little while, but he maintained something called the Quantum Algorithm Zoo.

HPCwire: Let’s get back to the NIST effort to develop quantum-resistant algorithms. As I understand it, the process began around 2016 and has been iterative: you invite submissions of potential quantum-resistant algorithms from the community, then test them and come up with some selections. Three rounds have been completed and their selections are in the process of becoming standards, with a fourth round ongoing. Walk me through the project and progress.

Dustin Moody: So these kinds of cryptographic competitions have been done in the past to select some of the algorithms that we use today. [So far] a widely used block cipher was selected through a competition. More recently a hash function. Back in 2016, we decided to do one of these [competitions] for new post quantum algorithms that we needed standards for. We let the community know about that. They were all excited and we got 82 submissions, of which 69 met the requirements that we’d set out to be involved. Then we had a process over six or seven years [during which] we evaluated them, going through a series of rounds. In each round, we narrowed further down to the most promising to advance, with tons of work going on in there, both internally at NIST and by the cryptographic community, doing research and benchmarks and experiments and everything.

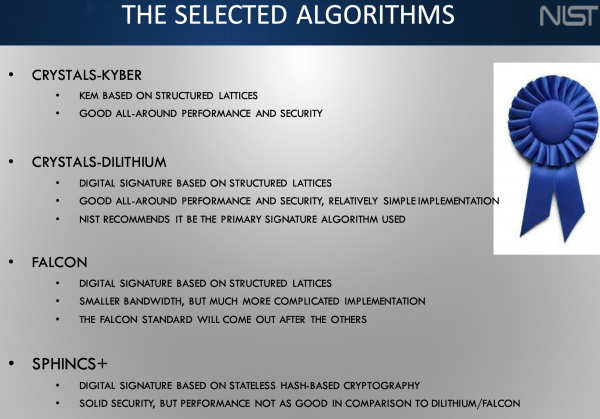

The third round, which had seven finalists and eight alternates, concluded in July of 2022, when we announced the algorithms that we would be standardizing as a result; that included one encryption algorithm and three signature algorithms. We did also keep a few encryption algorithms on into a fourth round for further study. They weren’t quite ready to be selected for standardization. That fourth round is still ongoing and will probably end this fall, and we’ll pick one or two of those to also standardize. We’ll have two or three encryption [methods] and three signatures as well.

HPCwire: It sounds like a relatively smooth process?

Dustin Moody: That process got a lot of attention from the community. A lot of the algorithms ended up being broken, some late in the process; that’s kind of the nature of how this thing works. That’s where we are now. We’re just about done writing the standards for the first ones that we selected, and our expected date for publishing them is this summer. The fourth round will end this fall, and then we’ll write standards for those, which will take another year or two.

We also have ongoing work to select a few more digital signature algorithms as well. The reason for that is so many of the algorithms we selected are based on what are called lattices; they’re the most promising family, [with] good performance, good security. And for signatures, we had two based on lattices, and then one not based on lattices. The one that wasn’t based on lattices — it’s called SPHINCS+ — turns out to be bigger and slower. So if applications needed to use it, it might not be ideal for them. We wanted to have a backup not based on lattices that could get used easily. That’s what this ongoing digital signature process is about [and] we’re encouraging researchers to try and design new solutions that are not based on lattices that are better performing.

HPCwire: When NIST assesses these algorithms, it must look to see how many computational resources are required to run them?

Dustin Moody: There’s specific evaluation criteria that we look at. Number one is security. Number two is performance. And number three is this laundry list of everything else. But we work internally at NIST, we have a team of experts and try to work with cryptography and industry experts around the world who are independently doing it. But sometimes we’re doing joint research with them in the field.

Security has a wide number of ways to look at it. There’s the theoretical security, where you’re trying to create security proofs where you’re trying to say, ‘if you can break my crypto system, then you can break this hard mathematical problem.’ And we can give a proof for that and because that hard mathematical problem has been studied, that gives us a little bit more confidence. Then it gets complicated because we’re used to doing this with classical computers and looking at how they can attack things. But now we have to look at how quantum computers can attack things, and they don’t yet exist. We don’t know their performance capabilities. So we have to extrapolate and do the best that we can. But it’s all thrown into the mix.

Typically, you don’t end up needing supercomputers. You’re able to analyze how long the attacks would take, how many resources they take, if you were to fully try to break the security parameters at current levels. The parameters are chosen so that it’s [practically] infeasible to do so. You can figure out, if I were to break this, it would take, you know, 100 years, so there’s no use in actually trying to do that unless you kind of find a breakthrough to find a different way. (See the descriptive list of NIST strength categories at the end of the article.)

HPCwire: Do you test on today’s NISQ (noisy intermediate-scale quantum) computers?

Dustin Moody: They’re too small right now to really have any impact in looking at how will a larger quantum computer fare against concrete parameters chosen at high enough security levels. So it’s more theoretical, when you’re figuring out how much resources it would take.

HPCwire: So summarizing a little bit, you think in the fall you’ll finish this last fourth round. Those would all be candidates for standards, which then anyone could use for incorporation into encryption schemes that would be quantum computer resistant.

Dustin Moody: That’s correct. The main ones that we expect to use were already selected in our first batch. So those are kind of the primary ones, most people will use those. But we need to have some backups in case, you know, someone comes up with a new breakthrough.

HPCwire: When you select them, do you deliberately have a range in terms of computational requirements, knowing that not everyone is going to have supercomputers at their doorstep? Many organizations may need to use more modest resources when running these encryption codes. So people could pick and choose a little bit based on the computational requirements.

Dustin Moody: Yes, there’s a range of security categories from one to five. Category Five has the highest security, but performance is impacted. So there’s a trade-off. We include parameters for categories one, three, and five so people can choose the one that’s best suited for their needs.

HPCwire: Can you talk a little bit about the Migration to PQC project, which is also, I believe, a NIST initiative to develop a variety of tools for implementing PQC? What’s your involvement? How is that going?

Dustin Moody: That project is being run by NIST’s National Cybersecurity Center of Excellence (NCCoE). I’m not one of the managers but I attend all the meetings and I’m there to support what goes on. They’ve collaborated with…I think the list is up to 40 or 50 industry partners and the list is on their website. It’s a really strong collaboration. A lot of these companies on their own would typically be competing with each other, but here they’re all working for the common good of making the migration as smooth as possible, getting experience developing tools that people are going to need to do cryptographic inventories. That’s kind of one of the first steps that an organization is going to need to do. Trying to make sure everything will be interoperable. What lessons can we learn as we go? Some people are further along than others, and how can we share that information best? It’s really good to have weekly calls, [and] we hold events from time to time. Mostly these industry collaborators are driving it and talking with each other and we just kind of organize them together and help them to keep moving.
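
To make the idea of a cryptographic inventory concrete, here is a minimal Python sketch of the kind of record such a tool might keep and how it could flag entries for migration. It is not one of the NCCoE tools; the `CryptoUse` record, the system names, and the sample entries are all hypothetical. The one fact it relies on is that Shor's algorithm threatens the public-key families in common use today (RSA, Diffie-Hellman, DSA and the elliptic-curve schemes), while symmetric ciphers and hash functions are not broken in the same way.

```python
from dataclasses import dataclass
from typing import List

# Public-key families that Shor's algorithm would break on a large
# enough quantum computer. Symmetric ciphers and hashes are not listed.
QUANTUM_VULNERABLE = {"RSA", "DSA", "DH", "ECDH", "ECDSA", "EDDSA"}


@dataclass(frozen=True)
class CryptoUse:
    """One inventory row: where an algorithm is used and why (hypothetical schema)."""
    system: str
    algorithm: str   # e.g. "RSA-2048", "AES-256"
    purpose: str


def needs_migration(use: CryptoUse) -> bool:
    """Flag a row whose algorithm family is quantum-vulnerable."""
    family = use.algorithm.upper().split("-")[0]
    return family in QUANTUM_VULNERABLE


def migration_worklist(inventory: List[CryptoUse]) -> List[CryptoUse]:
    """Return only the rows that need a post-quantum replacement."""
    return [use for use in inventory if needs_migration(use)]


# Illustrative entries only; a real inventory would come from discovery tools.
INVENTORY = [
    CryptoUse("vpn-gateway", "RSA-2048", "key establishment"),
    CryptoUse("backup-archive", "AES-256", "data at rest"),
    CryptoUse("code-signing", "ECDSA-P256", "digital signature"),
    CryptoUse("audit-log", "SHA-256", "integrity hash"),
]

if __name__ == "__main__":
    for use in migration_worklist(INVENTORY):
        print(f"{use.system}: {use.algorithm} ({use.purpose})")
```

Recording the purpose alongside the algorithm mirrors the NCCoE project description, which calls for identifying both the algorithm employed and what it is used for in each affected component.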

HPCwire: Is there any effort to build best practices in this area? Something that NIST and these collaborators from industry and academia and DOE and DOD could all provide? It would perhaps have the NIST stamp of authority on best practices for implementing quantum resistant cryptography.

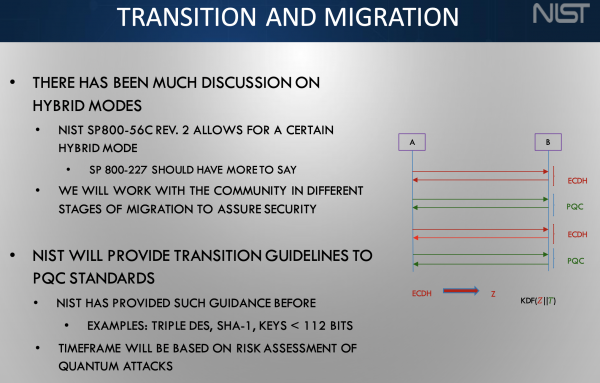

Dustin Moody: Well, there are the standards that my team is writing; those are written by NIST and those are the algorithms that people will implement. They’ll also then get tested and validated by some of our labs at NIST. The migration project is producing documents in a series (NIST SP 1800-38A, NIST SP 1800-38B, NIST SP 1800-38C), and those are updated from time to time, where they’re sharing what they’ve learned and putting best practices in them. They are NIST documents, written jointly with the NIST team and with these collaborators to share what they’ve got so far.

HPCwire: What can the potential user community do to be involved? I realize the project is quite mature, it’s been around for a while, and you’ve got lots of people who’ve been involved already. Are we at the stage where the main participants are working with each other and NIST in developing these algorithms, and it’s now a matter of sort of monitoring the tools that come out?

Dustin Moody: I would say every organization should be becoming educated on understanding the quantum threat, knowing what’s going on with standardization, knowing that you’re going to need to migrate, and what that’s going to involve for your organization. It’s not going to be easy and pain free. So planning ahead, and all that. If they want to join that collaboration (Migration to PQC), people are still joining from time to time and it is still open if they have something that they’ve got to share. But for most organizations or groups, it’s going to be just trying to create your plan, preparing for the migration. We want you to wait till the final standards are published, so you’re not implementing something that’s 99% the final standard. We want you to wait until that’s there, but you can prepare now.

HPCwire: When will they be final?

Dustin Moody: For three of the four that we selected, we put out draft standards a year ago, got public feedback, and have been revising since. The final versions are going to be published this summer. We don’t have an exact date, but it’ll be this summer.

HPCwire: At that point, will a variety of requirements come around using these algorithms, for example in the U.S. government and perhaps in industry requiring compliance?

Dustin Moody: Technically NIST isn’t a regulatory agency. So yes, the US government can. I think the OMB says that all agencies need to use our standards. So the federal government has to use the standards that we use for cryptography, but we know that a wider audience, industry in the United States and globally, tends to use the algorithms that we standardize as well.

HPCwire: We’re in a world in which geopolitical tensions are real. Are we worried about rivals from China or Russia, or other competing nations not sharing their advances? Or is the cryptanalyst community small enough that those kinds of things are not likely to happen because the people know each other?

Dustin Moody: There is a real geopolitical threat in terms of who gets the quantum computer quickest. If China develops that and they’re able to break into our cryptography, that’s a real threat. In terms of designing the algorithms and making the standards, it’s been a very cooperative effort internationally. Industry benefits when a lot of people are using the same algorithms all over the world. And we’ve seen other countries in global standards organizations say they’re going to use the algorithms that were involved in our process.

There are some exceptions like China never trusting the United States. They’re developing their own PQC standards. They’re actually very, very similar to the algorithms [we’re using] but they were selected internally. Russia has been doing their own thing, they don’t really communicate with the rest of the world very much. I don’t have a lot of information on what they’re doing. China, even though they are doing their own standards, did have researchers participate in the process; they hosted one of the workshops in the field a few years back. So the community is small enough that people are very good at working together, even if sometimes the country will develop their own standards.

HPCwire: How did you get involved in cryptography? What drew you into this field?

Dustin Moody: Well, I love math and the math I was studying has some applications in cryptography, specifically, something called elliptic curves. There’s crypto systems we use today that are based on these curves, which are beautiful mathematical objects that probably no one ever thought would be of any use in the real world. But it turns out they are for cryptography. So that’s kind of my hook into cryptography.

I ended up at NIST because NIST has elliptic curve cryptography standards. I didn’t know anything about post quantum cryptography. Around 2014, my boss said, we’re going to put you in this project dealing with post quantum cryptography and I was like, ‘What’s this? I’ve no idea what this is.’ Within a couple of years, it kind of really took off and grew and has become this high priority for the United States government. It’s been a kind of a fun journey to be on.

HPCwire: Will the PQC project just continue or will it wrap up at some point?

Dustin Moody: We’ll continue for a number of years. We still have the fourth round to finish. We’re still doing this additional digital signature process, which will take several more years. But then again, everything we do in the future needs to protect against quantum computers. So these initial standards will get published, they’ll be done at some point, but all future cryptography standards will have to take the quantum threat into account. So it’s kind of built in that we have to keep going for the future.

HPCwire: When you talk to the vendor community, they all say, “Encryption has been implemented in such a haphazard way across systems that it’s everywhere, and simply finding where it exists in all those things is difficult.” The real goal, they argue, should be to move to a more modular, predictable approach. Is there a way NIST can influence that? Or the selection of the algorithms can influence that?

Dustin Moody: Yes, and no. It’s very tricky. That idea you’re talking about, sometimes the word cryptoagility gets thrown out there in that direction. A lot of people are talking about, okay, we’re going to need to migrate these algorithms, this is an opportunity to redesign systems and protocols, maybe we can do it a little bit more intelligently than we did in the past. At the same time, it’s difficult to do that, because you’ve got so many interconnected pieces doing so many things. So it’s tricky to do, but we are encouraging people and having lots of conversations, like with the Migration to PQC project. We’re encouraging people to think about this, to redesign systems and protocols when you’re designing your applications. Knowing I need to transition to these algorithms, maybe I can redesign my system so that if I need to upgrade again, at some point, it’ll be much easier to do. I can keep track of where my cryptography is, what happens when I’m using it, what information I’m protecting. I hope that we’ll get some benefit out of this migration, but it’s certainly going to be very difficult, complicated and painful as well.
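
One common reading of cryptoagility is that application code asks for a purpose ("integrity", "signing") and a central policy decides which algorithm serves it, with the algorithm name stored next to the protected data so older records can still be checked after an upgrade. The Python sketch below illustrates that pattern under stated assumptions: it uses HMAC from the standard library purely as a stand-in primitive (it is not a post-quantum algorithm), and `REGISTRY`, `POLICY`, `protect` and `verify` are hypothetical names, not part of any NIST guidance.

```python
import hashlib
import hmac
from typing import Callable, Dict, Tuple

Tagger = Callable[[bytes, bytes], bytes]

# Every algorithm the system knows about, addressed by name.
# HMAC variants are stand-ins here; a real system would register
# signature or key-establishment schemes the same way.
REGISTRY: Dict[str, Tagger] = {
    "hmac-sha256": lambda key, msg: hmac.new(key, msg, hashlib.sha256).digest(),
    "hmac-sha3-256": lambda key, msg: hmac.new(key, msg, hashlib.sha3_256).digest(),
}

# The single place where an algorithm is chosen for each purpose.
POLICY: Dict[str, str] = {"integrity": "hmac-sha256"}


def protect(purpose: str, key: bytes, msg: bytes) -> Tuple[str, bytes]:
    """Tag msg with whatever algorithm the policy currently names."""
    name = POLICY[purpose]
    return name, REGISTRY[name](key, msg)


def verify(name: str, key: bytes, msg: bytes, tag: bytes) -> bool:
    """Check a tag using the algorithm name stored alongside it."""
    return hmac.compare_digest(REGISTRY[name](key, msg), tag)


if __name__ == "__main__":
    old_name, old_tag = protect("integrity", b"secret", b"record")
    POLICY["integrity"] = "hmac-sha3-256"      # the "upgrade" is one line
    new_name, new_tag = protect("integrity", b"secret", b"record")
    print(old_name, verify(old_name, b"secret", b"record", old_tag))
    print(new_name, verify(new_name, b"secret", b"record", new_tag))
```

The design choice worth noting is that callers never name an algorithm directly, so a later change touches the policy table rather than every call site. As Moody cautions, real systems have many interconnected pieces, and a sketch like this does not capture protocol negotiation, key sizes, or hardware constraints.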

HPCwire: Do you have an off-the-top-of-your-head checklist, sort of five things you should be thinking about now to prepare for post quantum cryptography?

Dustin Moody: I’d say number one, just know that the migration is coming. The United States government is mandating its agencies to do it, but industry as well is going to need to be doing this migration. The migration is not going to be easy, it’s not going to be pain free. You should be educating yourself as to what PQC is, the whole quantum threat, and starting to figure out, where are you using cryptography, what information is protected with cryptography. As you noted, that’s not as easy as it should be. Very often, you’re going to need to use sophisticated tools that are being developed to assist with that. Also talk to your vendors, your CIOs, your CEOs to make sure they’re aware and that they’re planning for budgets to do this. Just because a quantum computer [able to decrypt] isn’t going to be built for, who knows, maybe 15 years, they may think I can just put this off, but understanding that threat is coming sooner than you realize is important.

HPCwire: Thank you for your time!

In accordance with the second and third goals above (Submission Requirements and Evaluation Criteria for the Post-Quantum Cryptography Standardization Process), NIST will base its classification on the range of security strengths offered by the existing NIST standards in symmetric cryptography, which NIST expects to offer significant resistance to quantum cryptanalysis. In particular, NIST will define a separate category for each of the following security requirements (listed in order of increasing strength):

1) Any attack that breaks the relevant security definition must require computational resources comparable to or greater than those required for key search on a block cipher with a 128-bit key (e.g. AES-128)

2) Any attack that breaks the relevant security definition must require computational resources comparable to or greater than those required for collision search on a 256-bit hash function (e.g. SHA-256/SHA3-256)

3) Any attack that breaks the relevant security definition must require computational resources comparable to or greater than those required for key search on a block cipher with a 192-bit key (e.g. AES-192)

4) Any attack that breaks the relevant security definition must require computational resources comparable to or greater than those required for collision search on a 384-bit hash function (e.g. SHA-384/SHA3-384)

5) Any attack that breaks the relevant security definition must require computational resources comparable to or greater than those required for key search on a block cipher with a 256-bit key (e.g. AES-256)
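
The five categories above can be captured as a small lookup table. The Python sketch below does that and adds one helper reflecting Moody's remark that parameters are provided for categories one, three, and five. The names `SECURITY_CATEGORIES`, `PUBLISHED_CATEGORIES`, and both functions are illustrative assumptions, not identifiers from any NIST document.

```python
from typing import Dict, Tuple

# Category -> (reference attack, reference primitive), as listed above.
SECURITY_CATEGORIES: Dict[int, Tuple[str, str]] = {
    1: ("key search", "AES-128"),
    2: ("collision search", "SHA-256/SHA3-256"),
    3: ("key search", "AES-192"),
    4: ("collision search", "SHA-384/SHA3-384"),
    5: ("key search", "AES-256"),
}

# Per the interview, parameter sets are provided for these categories.
PUBLISHED_CATEGORIES = (1, 3, 5)


def reference_attack(category: int) -> str:
    """Describe the attack an adversary must at least match for a category."""
    attack, primitive = SECURITY_CATEGORIES[category]
    return f"{attack} on {primitive}"


def smallest_published_category(required: int) -> int:
    """Pick the lowest published category that meets a required strength."""
    for category in PUBLISHED_CATEGORIES:
        if category >= required:
            return category
    raise ValueError(f"no published category meets requirement {required}")


if __name__ == "__main__":
    for category in sorted(SECURITY_CATEGORIES):
        print(category, reference_attack(category))
```

For example, an application that decides it needs at least category 2 strength would, under this sketch, end up with a category 3 parameter set, trading some performance for the extra margin.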

Editor’s note: This article first ran in HPCwire.

The post NIST Q&A: Getting Ready for the Post Quantum Cryptography Threat? You Should Be appeared first on Datanami.
