IBM today rolled out its Power11 line of servers, the first new generation of IBM Power servers since 2021. The new servers offer an incremental performance boost over older Power servers. But perhaps more importantly, the Power11 boxes bring features that will appeal to organizations building and running AI workloads, notably the Spyre Accelerator for AI inference and gains in energy efficiency.

Like Power10, Power11 chips are based on 16-core dies, with 15 cores active at any time. Unlike in the previous generation, however, the 16th core serves as a spare that can be activated if one of the other 15 fails, which will make these already reliable machines even more reliable. In fact, IBM goes so far as to boast that Power11 will deliver "zero planned downtime for system maintenance."

On the memory side, Power11 servers use OpenCAPI Memory Interface (OMI) channels and support Differential DIMMs (D-DIMMs) based on DDR5 memory. IBM says Power11 servers deliver up to 55% better core performance than Power9 and up to 45% more capacity than Power10. Starting in the fourth quarter, Power11 boxes will also support the Spyre Accelerator, a system-on-a-chip.

The unveiled Power11 lineup includes: Power E1180, a full rack system with up to 256 cores and 64TB of RAM; Power E1150, a 4U server with up to 120 cores and 16TB of RAM; Power S1124, a 4U server with up to 60 cores and 8TB of RAM; and the Power S1122, a 2U server with up to 60 cores and 4TB of RAM. IBM will also make the S1124 and S1122 available in its IBM Cloud via the IBM Power Virtual Server.

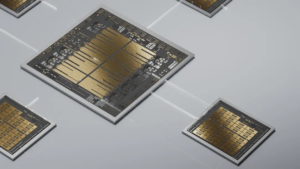

IBM first launched Spyre on its IBM Z mainframes, and Power11 marks its debut on the Power side of the house. Technically, it is an ASIC mounted on a PCIe card that plugs into the server. It features 32 AI accelerator cores and 128GB of LPDDR5 memory, and delivers 300 TOPS (trillions of operations per second) of compute. IBM developed Spyre alongside its Telum II processor; both were unveiled at the Hot Chips 2024 conference and featured heavily in the launch of IBM's new z17 mainframe this April.

Built for AI Inference, Not Training

After missing the AI model training craze of the past three years, IBM is happy to have a new server that fits squarely into the AI plans of big enterprises and scientific organizations, with energy efficiency a big selling point to boot.

For the past three years, system vendors have been chasing the market for training ever-bigger AI models on ever-bigger GPU clusters. As large language models (LLMs) approached the one-trillion-parameter mark, customers sought, and OEMs delivered, massive GPU-equipped compute clusters.

However, one server OEM missed that AI training boat: IBM. Since the launch of Power10 in 2021, IBM has not sold a new system that integrates with GPUs at the chip level. The Power11 chip, which is based on the Power10 design, also lacks support for GPU connections, which won't win it many fans among hyperscalers looking to train massive LLMs.

As luck would have it for IBM, AI hit the "scaling wall" in late 2024, putting a damper on the ever-growing size of LLMs and of the GPU clusters used to train them. The emergence of a new class of reasoning models, such as DeepSeek-R1, that can match the accuracy of traditional LLMs at a fraction of the size and training cost caught some in the AI community flat-footed. The resulting shift in emphasis from AI training to AI inference, along with the emergence of agentic AI, benefits IBM.

All of the Power11 models feature energy efficiency gains, which also play in IBM's favor. For instance, the Power E1180 delivers 10% more rPerf (IBM's relative performance metric) per watt than the Power E1080, while the E1150 and S1124 offer 20% and 22% more rPerf per watt than the E1050 and S1024, respectively. The Power S1122, meanwhile, offers up to 6.9x better performance per dollar for AI inferencing than the Power S1022.

The power consumption of AI is an emerging concern. According to a 2024 Department of Energy report, data center load growth has tripled over the past decade and is projected to double or triple by 2028, with AI driving a big chunk of that growth.

The post IBM Targets AI Inference with New Power11 Lineup appeared first on BigDATAwire.