A new scientific paper from Google details the performance of its Cloud TPU v4 supercomputing platform, claiming it provides exascale performance for machine learning with boosted efficiency.

The authors of the research paper claim the TPU v4 is 1.2x–1.7x faster and uses 1.3x–1.9x less power than the Nvidia A100 in similarly sized systems. The paper notes that Google has not compared TPU v4 to the newer Nvidia H100 GPUs due to their limited availability and 4nm process (vs. TPU v4’s 7nm process).
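To get a rough feel for what those two ranges imply together, the speedup and the power reduction can be multiplied into a performance-per-watt ratio. The short Python sketch below does only that arithmetic. It assumes "1.3x–1.9x less power" means the A100 draws 1.3 to 1.9 times the TPU v4's power, and it pairs the low ends and the high ends of the two ranges, which the paper may not support since the figures can come from different workloads. The function name `perf_per_watt_range` is hypothetical, and the result should be read as loose outer bounds rather than a figure reported by Google.

```python
def perf_per_watt_range(speedup, power_reduction):
    """Multiply a (low, high) speedup range by a (low, high) power-reduction
    range to get loose bounds on the performance-per-watt advantage.

    Assumption: the endpoints can be paired low-with-low and high-with-high.
    """
    low = speedup[0] * power_reduction[0]
    high = speedup[1] * power_reduction[1]
    return low, high


# Figures quoted in the article for TPU v4 vs. Nvidia A100.
speedup = (1.2, 1.7)          # TPU v4 is 1.2x-1.7x faster
power_reduction = (1.3, 1.9)  # assumed: A100 power / TPU v4 power

low, high = perf_per_watt_range(speedup, power_reduction)
print(f"Implied perf/W advantage: roughly {low:.2f}x to {high:.2f}x")
```

Under these assumptions the implied advantage spans roughly 1.56x to 3.23x, a wide band that mainly shows how sensitive such comparisons are to the workload chosen.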

As machine learning models have grown larger and more complex, so have their compute resource needs. Google’s Tensor Processing Units (TPUs) are specialized hardware accelerators used for building machine learning models, specifically deep neural networks. They are optimized for tensor operations and can significantly boost efficiency in the training and inference of large-scale ML models. Google says the performance, scalability, and availability make TPU supercomputers the workhorses of its large language models like LaMDA, MUM, and PaLM.

The TPU v4 supercomputer contains 4,096 chips interconnected via proprietary optical circuit switches (OCS), which Google claims are faster, cheaper, and utilize less power than InfiniBand, another popular interconnect technology. Google claims its OCS technology is less than 5% of the TPU v4’s system cost and power, stating it dynamically reconfigures the supercomputer interconnect topology to improve scale, availability, utilization, modularity, deployment, security, power, and performance.

Google engineers and paper authors Norm Jouppi and David Patterson explained in a blog post that, thanks to key innovations in interconnect technologies and domain-specific accelerators (DSAs), Google Cloud TPU v4 enabled a nearly 10x leap in scaling ML system performance over TPU v3. It also boosted energy efficiency by approximately 2x–3x compared to contemporary ML DSAs and reduced CO2e by approximately 20x over DSAs in what the company calls typical on-prem datacenters.

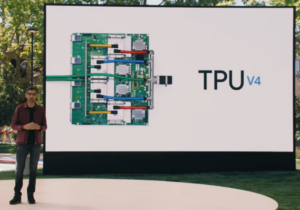

The TPU v4 system has been operational at Google since 2020. The TPU v4 chip was unveiled at the company’s 2021 I/O developer conference. Google says the supercomputers are actively used by leading AI teams for ML research and production across language models, recommender systems, and other generative AI.

Regarding recommender systems, Google says its TPU supercomputers are also the first with hardware support for embeddings, a key component of Deep Learning Recommendation Models (DLRMs) used in advertising, search ranking, YouTube, and Google Play. This is because each TPU v4 is equipped with SparseCores, which are dataflow processors that accelerate models that rely on embeddings by 5x–7x but use only 5% of die area and power.

One-eighth of a TPU v4 pod from Google’s ML cluster located in Oklahoma, which the company claims runs on ~90% carbon-free energy. (Source: Google)

Midjourney, a text-to-image AI startup, recently selected TPU v4 to train the fourth version of its image-generating model. “We’re proud to work with Google Cloud to deliver a seamless experience for our creative community powered by Google’s globally scalable infrastructure,” said David Holz, founder and CEO of Midjourney, in a Google blog post. “From training the fourth version of our algorithm on the latest v4 TPUs with JAX, to running inference on GPUs, we have been impressed by the speed at which TPU v4 allows our users to bring their vibrant ideas to life.”

TPU v4 supercomputers are available to AI researchers and developers at Google Cloud’s ML cluster in Oklahoma, which opened last year. At nine exaflops of peak aggregate performance, Google believes the cluster is the largest publicly available ML hub that operates with 90% carbon-free energy. Further details are available in the TPU v4 research paper.


The post Google Claims Its TPU v4 Outperforms Nvidia A100 appeared first on Datanami.