The last year has seen an explosion in LLM activity, with ChatGPT alone surpassing 100 million users. And the excitement has penetrated boardrooms across every industry, from healthcare to financial services to high-tech. The easy part is starting the conversation: nearly every organization we talk to tells us they want a ChatGPT for their company. The harder part comes next: “So what do you want that internal LLM to do?”

Or, as sales teams love to put it: “What’s your actual use case?” That’s where half of these conversations grind to a halt, because most organizations simply don’t know their use case.

ChatGPT’s simple chat interface has trained the first wave of LLM adopters in a simple interaction pattern: you ask a question and get an answer back. In some ways, the consumer version has taught us that LLMs are essentially a more concise Google. But used correctly, the technology is much more powerful than that.

Having access to an internal AI system that understands your data is more than a better internal search. The right way to think about it is not as “a slightly better Google (or heaven forbid, Clippy) on internal data” but as a workforce multiplier: do more by automating more, especially as you work with your unstructured data.

In this article, we’ll cover some of the main applications of LLMs we see in the enterprise that actually drive business value. We’ll start simple, with ones that sound familiar, and work our way to the bleeding edge.

Top LLM Use Cases in the Enterprise

We’ll describe five categories of use cases; for each, we’ll explain what we mean by the use case, why LLMs are a good fit, and give a specific example of an application in that category.

The categories are:

- Q&A and search (i.e., chatbots)

- Information extraction (creating structured tables from documents)

- Text classification

- Generative AI

- Blending traditional ML with LLMs – personalization systems are one example.

For each, it can also be helpful to understand whether solving the use case requires changing the LLM’s knowledge (the set of facts or content it has been exposed to) or its reasoning (how it generates answers based on those facts). By default, most widely used LLMs are trained on English-language data from the internet as their knowledge base and “taught” to generate similar language in response.

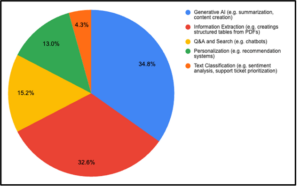

Over the past three months, we surveyed 150 executives, data scientists, machine learning engineers, developers, and product managers at both large and small enterprises about their internal use of LLMs. Those responses, combined with what we see from the customers we work with daily, inform the insights here.

Self-reported use case from a survey of 150 data professionals

Use Case #1: Q&A and Search

Candidly, this is what most customers think of first when they imagine bringing ChatGPT in-house: they want to ask questions over their documents.

In general, LLMs are well suited to this task: you can essentially “index” your internal documentation and use a process called Retrieval Augmented Generation (RAG) to pass new, company-specific knowledge to the same LLM reasoning pipeline.
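To make the retrieve-then-generate flow concrete, here is a minimal Python sketch. It is an illustration under simplifying assumptions, not a production design: the “index” is a naive bag-of-words cosine similarity (real systems typically use embedding models and a vector store), and all function names are hypothetical. The resulting prompt would then be sent to whichever LLM you use.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it on non-alphanumeric characters."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k document chunks most similar to the question (the 'index' lookup)."""
    q = Counter(tokenize(question))
    ranked = sorted(chunks, key=lambda c: cosine(q, Counter(tokenize(c))), reverse=True)
    return ranked[:k]


def build_rag_prompt(question: str, chunks: list[str], k: int = 2) -> str:
    """Place the retrieved company-specific text in the prompt next to the question."""
    context = "\n".join(f"- {c}" for c in retrieve(question, chunks, k))
    return (
        "Answer using only the context below. "
        "If the answer is not in the context, say you don't know.\n"
        f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    )


docs = [
    "Project A planted 40,000 trees in the Amazon basin in 2022.",
    "Project B restored mangroves along the coast of Kenya.",
    "The travel policy requires receipts for all expenses.",
]
print(build_rag_prompt("How many trees did Project A plant?", docs, k=1))
```

Because the company-specific text travels in the prompt, the underlying model does not need to be retrained when documents change; you update the index instead.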

There are two main caveats organizations should be aware of when building a Q&A system with LLMs:

- LLMs are non-deterministic and can hallucinate, producing confident but incorrect answers. You need guardrails, either on the outputs or on how the LLM is used inside your business, to safeguard against this.

- LLMs aren’t good at analytical computation or “aggregate” queries. If you gave an LLM 100 financial filings and asked “Which company made the most money?”, answering would require aggregating knowledge across many companies and comparing them to get a single answer. Out of the box, the LLM will fail at this, but we’ll cover strategies for tackling it in use case #2.
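As one illustration of an output guardrail, the sketch below rejects answers that cite numbers not present in the retrieved context. This is a single hypothetical heuristic in Python, assuming the context is plain text; it catches only one kind of hallucination and is no substitute for broader evaluation or human review.

```python
import re


def numbers_in(text: str) -> set[str]:
    """Collect numeric tokens such as '40,000' or '3.5', with thousands separators removed."""
    return {n.replace(",", "") for n in re.findall(r"\d[\d,]*(?:\.\d+)?", text)}


def grounded(answer: str, context: str) -> bool:
    """True if every number in the answer also appears in the retrieved context."""
    return numbers_in(answer) <= numbers_in(context)


def guarded_answer(answer: str, context: str) -> str:
    """Pass the answer through only if it passes the grounding check."""
    if grounded(answer, context):
        return answer
    return "I couldn't verify that answer against the source documents."


context = "Project A planted 40,000 trees in the Amazon basin in 2022."
print(guarded_answer("Project A planted 40,000 trees.", context))
print(guarded_answer("Project A planted 55,000 trees.", context))
```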

Example: Helping scientists gain insights from scattered reports

One nonprofit we work with is an international leader in environmental conservation. They develop detailed PDF reports for the hundreds of projects they sponsor annually. With a limited budget, the organization must carefully allocate program dollars to projects delivering the best outcomes. Historically, this required a small team to review thousands of pages of reports. There aren’t enough hours in the day to do this effectively. By building an LLM Q&A application on top of its large corpus of documents, the organization can now quickly ask questions like, “What are the top 5 regions where we have had the most success with reforestation?” These new capabilities have enabled the organization to make smarter decisions about their projects in real time.

Use Case #2: Information Extraction

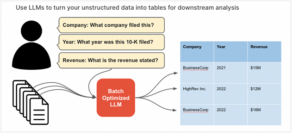

It’s estimated that around 80% of all data is unstructured, and much of that data is text contained within documents. The older cousin of question answering, information extraction is intended to answer the analytical and aggregate queries enterprises want to run over these documents.

The process of building effective information extraction involves running an LLM over each document to “extract” relevant information and construct a table you can query.
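A minimal sketch of that loop in Python, assuming a hypothetical `llm` callable that takes a prompt and returns a JSON string. The field names (`company`, `revenue_musd`) and the regex-based `stub_llm` are illustrative stand-ins so the example runs without a model; in practice you would swap in a real model call.

```python
import json
import re
import sqlite3
from typing import Callable

# Hypothetical extraction prompt; doubled braces survive str.format as literal braces.
EXTRACTION_PROMPT = (
    "Extract the company name and annual revenue in USD millions from the "
    "document below. Respond with JSON only: "
    '{{"company": string, "revenue_musd": number}}\n\nDocument:\n{doc}'
)


def extract_table(docs: list[str], llm: Callable[[str], str]) -> sqlite3.Connection:
    """Run the LLM once per document and load the extracted fields into a SQL table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE filings (company TEXT, revenue_musd REAL)")
    for doc in docs:
        record = json.loads(llm(EXTRACTION_PROMPT.format(doc=doc)))
        conn.execute(
            "INSERT INTO filings VALUES (?, ?)",
            (record["company"], record["revenue_musd"]),
        )
    return conn


def stub_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model: pulls the fields out with a regex.
    Assumes every document matches the pattern (otherwise m is None)."""
    doc = prompt.split("Document:\n", 1)[1]
    m = re.search(r"(\w[\w ]*?) reported revenue of \$([\d.]+) million", doc)
    return json.dumps({"company": m.group(1), "revenue_musd": float(m.group(2))})


filings = [
    "Acme Corp reported revenue of $120.5 million in FY2022.",
    "Globex reported revenue of $310 million in FY2022.",
    "Initech reported revenue of $95 million in FY2022.",
]
conn = extract_table(filings, stub_llm)

# The aggregate question is now an ordinary SQL query.
top = conn.execute(
    "SELECT company, revenue_musd FROM filings ORDER BY revenue_musd DESC LIMIT 1"
).fetchone()
print(top)  # ('Globex', 310.0)
```

Once the fields live in a table, the aggregate question from use case #1 (“Which company made the most money?”) no longer depends on the LLM reasoning over 100 documents at once; the LLM only has to read one document at a time.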

Example: Creating Structured Insights for Healthcare and Banking

Information extraction is useful in a number of industries. In healthcare, for example, you might want to enrich structured patient records with data from PDF lab reports or doctors’ notes. Another example is investment banking: a fund manager can take a large corpus of unstructured financial reports, like 10-Ks, and create structured tables with fields like revenue by year, number of customers, new products, new markets, etc. This data can then be analyzed to determine the best investment options. Check out this free example notebook on how you can do info extraction.

Use Case #3: Text Classification

Text classification, usually the domain of traditional supervised machine learning models, is one classic way high-tech companies are using large language models to automate tasks like support ticket triage, content moderation, sentiment analysis, and more. The primary benefit LLMs have over supervised ML is that they can operate zero-shot, meaning without training data or the need to adjust the underlying base model.
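Here is a minimal Python sketch of the zero-shot pattern: the label set lives in the prompt, and the model’s free-form reply is mapped back onto the allowed labels. The labels, prompt wording, and `fake_llm` stand-in are hypothetical; a real system would call an actual model.

```python
from typing import Callable

LABELS = ["billing", "bug_report", "account_access", "feature_request"]  # hypothetical label set


def build_classification_prompt(ticket: str, labels: list[str]) -> str:
    """Zero-shot: the categories are described in the prompt; no training examples are given."""
    return (
        "Classify the support ticket into exactly one of these categories: "
        + ", ".join(labels)
        + ".\nRespond with the category name only.\n\n"
        + f"Ticket: {ticket}\nCategory:"
    )


def parse_label(response: str, labels: list[str], fallback: str = "unknown") -> str:
    """Map free-form model output onto the allowed label set, or return the fallback."""
    cleaned = response.strip().lower().strip(".")
    return cleaned if cleaned in labels else fallback


def classify(ticket: str, llm: Callable[[str], str]) -> str:
    return parse_label(llm(build_classification_prompt(ticket, LABELS)), LABELS)


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call; returns a messy but valid label."""
    return " Billing.\n"


print(classify("I was charged twice this month.", fake_llm))  # billing
```

Routing unrecognized replies to a fallback label keeps a misbehaving model from silently inventing new categories downstream.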

If you do have labeled examples, you can also fine-tune an LLM on them to get better performance. Fine-tuning is primarily instrumental in changing the way the LLM reasons, for example teaching it to pay more attention to some parts of an input than others. It can also help you train a smaller model (since it doesn’t need to be able to recite French poetry, just classify support tickets) that is less expensive to serve.
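If you go the fine-tuning route, the first step is usually turning labeled examples into training records. The sketch below writes JSON Lines in Python; the `prompt`/`completion` field names are an assumption, since each fine-tuning tool defines its own schema.

```python
import json


def to_finetune_jsonl(examples: list[tuple[str, str]]) -> str:
    """Turn labeled (ticket, label) pairs into JSON Lines, one training record per line.
    The "prompt"/"completion" field names are assumed; adapt them to your tool's schema."""
    lines = []
    for ticket, label in examples:
        record = {
            "prompt": f"Classify the support ticket.\nTicket: {ticket}\nCategory:",
            "completion": f" {label}",
        }
        lines.append(json.dumps(record))
    return "\n".join(lines)


labeled = [
    ("I was charged twice this month.", "billing"),
    ("The export button crashes the app.", "bug_report"),
]
print(to_finetune_jsonl(labeled))
```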

Example: Automating Customer Support

Forethought, a leader in customer support automation, uses LLMs for a broad range of solutions, such as intelligent chatbots and support ticket classification that helps customer service agents prioritize and triage issues faster. Their work with LLMs is documented in this real-life use case with Upwork.

Use Case #4: Generative Tasks

Venturing further toward the cutting edge is the class of use cases where an organization wants to use an LLM to generate content, often for an end-user-facing application.

You’ve seen examples of this before even with ChatGPT, like the classic “write me a blog post about LLM use cases”. But from our observations, generative tasks in the enterprise tend to be unique in that they usually look to generate some structured output. This structured output could be code that is sent to a compiler, JSON sent to a database or a configuration that helps automate some task internally.

Structured generation can be tricky; not only does the output need to be accurate, it also needs to be formatted correctly. But when successful, it is one of the most effective ways that LLMs can help translate natural language into a form readable by machines and therefore accelerate internal automation.
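A common way to handle the formatting half of the problem is to validate every response and re-prompt on failure. The Python sketch below assumes a hypothetical `llm` callable and a hypothetical two-field schema; production systems often use a full JSON Schema validator or constrained decoding instead.

```python
import json
from typing import Callable

REQUIRED_FIELDS = {"action": str, "ticket_id": int}  # hypothetical target schema


def validate(raw: str) -> dict:
    """Parse model output and check that the required fields exist with the right types."""
    data = json.loads(raw)  # raises json.JSONDecodeError (a ValueError) on malformed JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    for field, typ in REQUIRED_FIELDS.items():
        if not isinstance(data.get(field), typ):
            raise ValueError(f"field {field!r} missing or not {typ.__name__}")
    return data


def generate_structured(prompt: str, llm: Callable[[str], str], max_attempts: int = 3) -> dict:
    """Ask for JSON, validate it, and re-prompt with the error message on failure."""
    last_error = ""
    for _ in range(max_attempts):
        full_prompt = prompt
        if last_error:
            full_prompt += f"\nYour previous answer was invalid: {last_error}. Return valid JSON only."
        try:
            return validate(llm(full_prompt))
        except ValueError as err:
            last_error = str(err)
    raise RuntimeError(f"no valid output after {max_attempts} attempts: {last_error}")


replies = iter(['{"action": "close"', '{"action": "close", "ticket_id": 42}'])


def fake_llm(prompt: str) -> str:
    """Hypothetical model stand-in: the first reply is truncated JSON, the second is valid."""
    return next(replies)


print(generate_structured("Close ticket 42. Reply in JSON.", fake_llm))
# {'action': 'close', 'ticket_id': 42}
```

Feeding the validation error back into the prompt gives the model a concrete hint about what to fix, and the attempt limit keeps a persistently malformed response from looping forever.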

Example: Generating Code

In this short tutorial video, we show how an LLM can be used to generate JSON, which can then be used to automate downstream applications that work via API.

Use Case #5: Blending ML and LLMs

Authors shouldn’t have favorites, but my favorite use case is the one we’ve seen most recently from companies on the cutting edge of production ML applications: blending traditional machine learning with LLMs. The core idea is to augment the context and knowledge base of an LLM with predictions from a supervised ML model and let the LLM do additional reasoning on top of them. Essentially, instead of using a standard database as the knowledge base for an LLM, you use the output of a separate machine learning model.

A great example of this is using embeddings and a recommender system model for personalization.

Example: Conversational Recommendation for E-commerce

An e-commerce vendor we work with was interested in creating a more personalized shopping experience that takes advantage of natural language queries like, “What leather men’s shoes would you recommend for a wedding?” They built a recommender system using supervised ML to generate personalized recommendations based on a customer’s profile. These recommendations are then fed to an LLM as context so the customer can ask questions through a chat-like interface. You can see an example of this use case with this free notebook.
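The pattern can be sketched in a few lines of Python, assuming hypothetical toy embeddings in place of a trained recommender: a dot-product score ranks candidate products for one customer, and the top results are placed in the LLM prompt as its knowledge base. Product names, vectors, and prompt wording are all illustrative.

```python
# Hypothetical toy embeddings; in practice these would come from a trained recommender model.
PRODUCT_EMBEDDINGS = {
    "Oxford leather dress shoe": [0.9, 0.1, 0.8],
    "Suede loafer": [0.6, 0.3, 0.5],
    "Running sneaker": [0.1, 0.9, 0.1],
}


def score(user_vec: list[float], item_vec: list[float]) -> float:
    """Dot-product relevance score, as in a simple matrix-factorization recommender."""
    return sum(u * i for u, i in zip(user_vec, item_vec))


def recommend(user_vec: list[float], k: int = 2) -> list[str]:
    """Rank all products for this customer and keep the top k."""
    ranked = sorted(
        PRODUCT_EMBEDDINGS,
        key=lambda name: score(user_vec, PRODUCT_EMBEDDINGS[name]),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question: str, user_vec: list[float], k: int = 2) -> str:
    """Feed the recommender's output to the LLM as the knowledge it may draw on."""
    lines = "\n".join(f"{rank}. {name}" for rank, name in enumerate(recommend(user_vec, k), start=1))
    return (
        "You are a shopping assistant. Recommend only from the personalized "
        f"candidates below, ranked by our recommender model.\n{lines}\n\n"
        f"Customer question: {question}"
    )


customer = [1.0, 0.0, 0.7]  # hypothetical profile embedding
print(build_prompt("What leather men's shoes would you recommend for a wedding?", customer))
```

Splitting the work this way lets each component do what it is good at: the supervised model supplies personalization, and the LLM explains and filters the candidates in natural language.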

The breadth of high-value use cases for LLMs extends far beyond ChatGPT-style chatbots. Teams looking to get started with LLMs can take advantage of commercial LLM offerings or customize open-source LLMs like Llama 2 or Vicuna on their own data within their cloud environment using hosted platforms like Predibase.

About the author: Devvret Rishi is the co-founder and Chief Product Officer at Predibase, a provider of tools for developing AI and machine learning applications. Prior to Predibase, Devvret was a product manager at Google and was a Teaching Fellow for Harvard University’s Introduction to Artificial Intelligence class.

The post What Does ChatGPT for Your Enterprise Really Mean? appeared first on Datanami.
