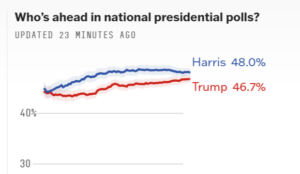

Less than a week before the 2024 presidential election, Americans are closely following the polls to see whether Kamala Harris or Donald Trump will become the 47th president of the United States. But can we trust the polls? After they failed to accurately predict the results of the 2016 and 2020 elections, it remains an open question.

After decades of relatively accurate political polling, Americans were surprised to learn on November 9, 2016 that the majority of polls, which predicted a clear victory for Hillary Clinton, turned out to be wrong. While the primary reason for the miss was clear–in constructing their statistical samples, pollsters incorrectly predicted who would actually turn out to vote–the underlying causes driving this phenomenon were not clear, and have been the subject of much debate.

One possible cause is what has been termed the “Shy Trump Voter” effect: social stigma leads would-be Trump voters to hide their political predilection. The idea echoes the “Shy Tory Voter” effect in the UK, which has been cited as a factor in the surprise Brexit vote in June 2016.

In addition to avoiding pollsters, Shy Trump Voters may simply lie to them. This is a source of statistical error that has been estimated to skew poll results by up to 4%, a substantial amount when elections are close.

There’s plenty of evidence that suggests less politically engaged voters, as Trump supporters tend to be, are less likely to engage with surveys, writes Nate Cohn, the chief political analyst for the New York Times.

“Pollsters have known for decades that the least engaged, least political voters are least likely to respond to surveys,” Cohn wrote in a recent story on the state of political polling. “This may even sound obvious: A political junkie would naturally be more excited to take a poll than someone without any interest in politics.”
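The mechanism Cohn describes can be illustrated with a little arithmetic. The sketch below is not drawn from any pollster's method; the function name `observed_share` and all of the rates are hypothetical, chosen only to show how a small gap in response rates can move a topline number even when nobody lies.

```python
def observed_share(true_share_a, response_rate_a, response_rate_b):
    """Share of poll respondents backing candidate A when A's and B's
    supporters answer surveys at different rates (two-candidate race)."""
    responders_a = true_share_a * response_rate_a
    responders_b = (1 - true_share_a) * response_rate_b
    return responders_a / (responders_a + responders_b)


# Hypothetical inputs: the electorate is split evenly, but candidate A's
# supporters respond to 6% of survey requests and candidate B's to 5%.
poll = observed_share(true_share_a=0.50, response_rate_a=0.06, response_rate_b=0.05)
print(f"True support for A: 50.0%, polled support for A: {poll:.1%}")
```

With these made-up rates, an evenly split electorate shows up in the raw sample as a lead of several points for the more responsive side, which is why pollsters reweight their samples rather than report raw counts.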

The Shy Trump Voter effect is a well-known source of error. But there are other sources that must be accounted for, says Steve Bennett, senior director of the Office of Public Readiness and Corporate Planning at SAS.

“Some organizations that combined live-interview and automated-dialer calls observed that Trump did better when voters were sharing their voting intention with a recorded voice rather than a live one,” Bennett says. “The effect seemed to be more pronounced for female Trump supporters, who seemed to be less likely to say they would be comfortable talking to a pollster about their vote.”

Cohn says the Shy Trump Voter is the chief driver of statistical error in one of his two theories for why political polling continues to struggle. Under what he dubs the Unified Theory, pollsters simply cannot properly represent politically disengaged voters when constructing political polls. And when these Shy Trump Voters actually show up, primarily for presidential elections every four years, where Republicans tend to do better (Democrats tend to outperform in midterm elections), there’s not much pollsters can do to counter it.

Cohn’s other theory, which he dubs the Patchwork Theory, includes other factors as well, including shifting demographics and the impact of the Covid-19 pandemic.

In 2020, many people followed government orders to stay at home to minimize their own exposure to the virus and lower transmission rates in the community. That had the effect of jacking up the response rate for polls, which pollsters initially cheered, Cohn writes.

But as the year wore on, many people started to return to work and therefore weren’t as available to answer poll questions, whether via telephone calls, on the Web, email, or even text-based polling. And it turns out that the people who were more likely to return to work were also more likely to vote for Trump, he writes.

“The (overly simplistic but plausible) story here is that Democrats were free and available to take surveys all day, lonely and grateful to speak with a human, enraged by Mr. Trump and the pandemic, all while Republicans tended to be out living their lives,” Cohn says.

The pandemic also had other impacts, including on the accuracy of landline telephones for polling, Cohn writes. When landlines were ubiquitous among Americans, they were a reliable method to achieve a statistically random sample. In 2020, when fewer than 40% of Americans had landlines, one survey that relied on landlines overstated Joe Biden’s lead by 13% relative to the actual election result, Cohn writes. Today, fewer than 25% of American homes have landlines.

Cohn’s Patchwork Theory also factors in educational attainment. Prior to 2016, many state polls didn’t correct for whether a person reported having a college degree because there was no known correlation between educational attainment and voting, he writes. But a correlation had emerged by 2016, and the 2016 state polls erred by including too large a share of college graduates, which skewed the polling results toward Hillary Clinton by 4 percentage points, he writes.
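To make the education correction concrete, here is a minimal sketch of post-stratification weighting, one common way to adjust a sample to known population shares. It is an illustration, not the procedure any particular pollster used: the function `weighted_share`, the 100-person sample, and the 40/60 education split are all hypothetical, with numbers picked so the shift comes out to a few points.

```python
from collections import Counter


def weighted_share(respondents, population_shares, candidate):
    """Weight each respondent by (population share / sample share) of their
    education group, then return the weighted share backing `candidate`.

    respondents: list of (education_group, candidate_choice) tuples
    population_shares: dict mapping education_group -> share of the electorate
    """
    n = len(respondents)
    sample_counts = Counter(group for group, _ in respondents)
    weights = {
        group: population_shares[group] / (count / n)
        for group, count in sample_counts.items()
    }
    total = sum(weights[group] for group, _ in respondents)
    support = sum(weights[group] for group, choice in respondents if choice == candidate)
    return support / total


# Hypothetical sample: college graduates are 60% of respondents
# but (by assumption) only 40% of the electorate.
sample = (
    [("college", "A")] * 36 + [("college", "B")] * 24
    + [("non_college", "A")] * 16 + [("non_college", "B")] * 24
)
electorate = {"college": 0.40, "non_college": 0.60}

raw = sum(1 for _, choice in sample if choice == "A") / len(sample)
adjusted = weighted_share(sample, electorate, "A")
print(f"Raw share for A: {raw:.1%}, education-weighted share for A: {adjusted:.1%}")
```

In this toy sample, candidate A leads in the raw count but trails once college graduates are weighted down to their assumed share of the electorate. The correction only works if the chosen weighting variable captures the reason people are missing from the sample.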

Armed with this information, the state polls corrected for educational attainment, Cohn writes (the national polls had already made the adjustment). But many polls still got it wrong in 2020. In fact, many polls did even worse in 2020 than in 2016.

In-person versus mail-in voting is another wild card in the 2024 election (Vladirina32/Shutterstock)

“Despite all the methodology improvements between 2016 and 2020, polling seemed to get even less accurate,” SAS’s Bennett tells BigDATAwire via email. “In the run-up to the 2020 election, as much as 12% of Republican voters said they would not report their true opinions about candidates to pollsters. Despite the fact that Joe Biden prevailed, post-election analyses showed that pre-election polling overstated support for Joe Biden by as much as 4%, the largest polling error in a generation.”

So where do we stand in 2024? Do pollsters, statisticians, and data scientists have the information necessary to correct their past errors and create voting models that are true representations of the electorate? Unfortunately, the answer appears to be “probably not.”

It’s telling that Nate Silver, the famously meticulous statistician who correctly predicted the outcome of the 2008 presidential election in 49 of 50 states, is resorting to gut instincts.

“In an election where the seven battleground states are all polling within a percentage point or two, 50-50 is the only responsible forecast,” he wrote in a recent New York Times opinion piece. “My gut says Donald Trump.”

Despite the attention given to the 2016 and 2020 polling errors, Silver, who founded the now-defunct FiveThirtyEight website, isn’t optimistic that they have been fully addressed, which renders the polls predictably unreliable. “It’s extremely hard to predict the direction of polling errors,” he writes.

Silver doesn’t fully buy into the Shy Trump Voter effect, noting that conservatives don’t show any persistent underrepresentation in polling for elections in other countries, such as those involving Marine Le Pen in France. However, he notes that nonresponse bias “can be a hard problem to solve.”

“Trump supporters often have lower civic engagement and social trust, so they can be less inclined to complete a survey from a news organization,” writes Silver, who left FiveThirtyEight last year and now tracks political races at Silver Bulletin. “Pollsters are attempting to correct for this problem with increasingly aggressive data-massaging techniques, like weighting by educational attainment (college-educated voters are more likely to respond to surveys) or even by how people say they voted in the past. There’s no guarantee any of this will work.”

There’s also the possibility that the steps pollsters take to correct for various sources of bias could inadvertently end up skewing the results, leading to Harris outperforming the polls, he writes.

“Polls are increasingly like mini-models, with pollsters facing many decision points about how to translate nonrepresentative raw data into an accurate representation of the electorate,” Silver writes. “If pollsters are terrified of missing low on Mr. Trump again, they may consciously or unconsciously make assumptions that favor him.”

SAS’s Bennett isn’t convinced that pollsters will finally nail a presidential poll after flubbing the last two.

“So where do we stand in 2024? Unfortunately, I’m not sure we’re much better than we were in 2016 and 2020,” he writes. “And that’s not because polling methodologies aren’t getting better, but rather because the input data seems to have an inherent bias that continues to undercount Trump supporters.”

The Shy Trump Voter effect is still a factor, as are other sampling methodologies that depress Trump’s true support, Bennett says. That’s a tough problem to overcome.

“I’m not brave enough to say that Mr. Trump has 4% more support than the numbers show today, but I still think there is an undercount of Trump supporters in 2024 pre-election polling,” he says. “Foreign betting markets sure seem like they ‘know something we don’t,’ having a much higher win probability for Mr. Trump than the polls or statistical models show.

“It will be interesting to see if this bias is a lasting aspect of presidential election polling into the 2028 cycle, or if it’s specific to Mr. Trump as a candidate,” Bennett continues. “Unfortunately for all the great statistical models from FiveThirtyEight, Nate Silver, and others, it may be another election of ‘garbage in, garbage out’ for the polls.”

The post After Misses in 2016 and 2020, Have We Fixed Political Polling in 2024? appeared first on BigDATAwire.